
Multi-View Augmented and Virtual Reality from a Drone Perspective

Augmented Reality (AR) is not only a popular topic nowadays; it also enjoys a plethora of applications in which users can control alternative viewing positions based on a device and its camera.

In the world of drones, or unmanned aerial vehicles (UAVs), the viewing position can likewise come from a camera mounted on the drone. Defining and identifying the requirements of such a setup leads to a system specification for what is known as multi-view AR.

Two authors from the University of Washington have recently published a study that focuses on concepts around modern augmented and virtual reality (AR/VR) and drones. Their central idea is to mount a camera on a movable base such as a remote-controlled vehicle or a UAV. An untethered UAV supports motion along all three axes and therefore allows a wide range of view controls, which makes it a well-suited platform.

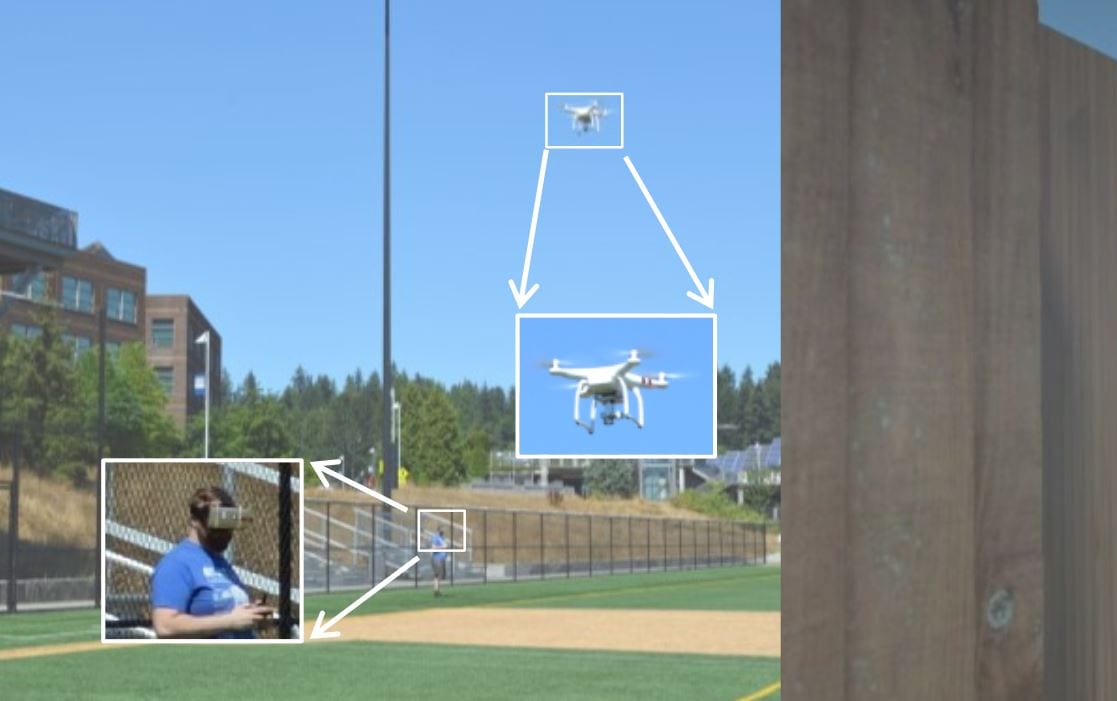

The AR Drone Maze Game. Left: User in open space navigating the maze with the drone above. Center: First-person view of the maze. Right: Third-person (top-down) view of the player in the maze. The user may switch between the two views at will.

The authors present an illustration that shows the rendered results (VR) of augmentations in the physical world alongside the rendered results for the human eye (AR), as seen from the perspective of a drone. They make the case for drones in this field by stating:

“With the camera video feed displayed directly on the HMD, the traditional first person view can be achieved when the camera view parameters resemble those of the user’s eyes. When the camera view parameters then deviates, the user experiences viewing of 360-degree video in real-time as though located at the current camera position, or a remote first person view. When the user is visible in the camera view, it is as though the user is self-observing, or a third person view. A multi-view AR system should support predefined views, e.g., first and third person, and full manipulation of the drone/camera position and viewing directions.”

Using a DJI Drone in an ‘AR Drone Maze Game’

Building on this system specification, the authors use a DJI drone in what is called the ‘AR Drone Maze Game’, an application designed by Lenovo to feature drone camera control and multi-view switching. The game allows the user to toggle between the device camera (first person) and the drone video (third person).
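To make the view toggle concrete, here is a minimal sketch of how switching between the two feeds could be modeled. It is not the authors' implementation: the names `View`, `ViewSwitcher`, and `select_frame` are hypothetical, and frames are represented by plain strings rather than real video data.

```python
from enum import Enum


class View(Enum):
    """The two predefined views mentioned in the article."""
    FIRST_PERSON = "device camera"   # feed from the user's own device
    THIRD_PERSON = "drone video"     # feed from the drone-mounted camera


class ViewSwitcher:
    """Hypothetical helper that tracks which feed is currently shown."""

    def __init__(self) -> None:
        # Assumption: the application starts in the first-person view.
        self.active = View.FIRST_PERSON

    def toggle(self) -> View:
        """Switch to the other view and return the newly active one."""
        if self.active is View.FIRST_PERSON:
            self.active = View.THIRD_PERSON
        else:
            self.active = View.FIRST_PERSON
        return self.active

    def select_frame(self, device_frame, drone_frame):
        """Return the frame that matches the active view."""
        if self.active is View.FIRST_PERSON:
            return device_frame
        return drone_frame


switcher = ViewSwitcher()
shown_before = switcher.select_frame("device-frame", "drone-frame")
switcher.toggle()
shown_after = switcher.select_frame("device-frame", "drone-frame")
```

In a real system the frames would come from the headset camera and the drone's video stream, and the toggle would be bound to a user input; those details are outside what the article describes.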

“The AR Drone Maze Game is designed as an engaging interactive application to explore the potentials of multi-view AR systems. Based on feedbacks from a small number of test subjects, even with the drifting localization results, the application is indeed appealing and real-time self-observing during maze navigation does indeed facilitate awareness of the surrounding environment,” the authors noted.

In short, the authors identify and specify the requirements of a multi-view AR application, design and develop the supporting infrastructure, and build a demonstration on top of it. The AR Drone Maze Game thus points to many potential applications of multi-view AR functionality, one of which is on-site AR exploration of unbuilt structures.

Conclusion

In the final sections, the authors state that their work is “part of the investigation of the Cross Reality Collaboration Sandbox Research Group at the University of Washington Bothell where the group seeks to extend Collaborative Virtual Environments (CVE) with physical world and objects to support both AR and VR users collaborating in the same environment while at distinct geographic locations.”

Even though the presented results are still an early step in the development of AR and VR for drones, this kind of infrastructure paves the way towards improved drone navigation, especially for users who rely on a third-person view and want a better perspective. It could also foster remote collaboration with drones.

Citation: Aaron Hitchcock and Kelvin Sung. 2018. Multi-view augmented reality with a drone. In Proceedings of the 24th ACM Symposium on Virtual Reality Software and Technology (VRST ’18), Stephen N. Spencer (Ed.). ACM, New York, NY, USA, Article 108, 2 pages. DOI: https://doi.org/10.1145/3281505.3283397 – https://dl.acm.org/citation.cfm?id=3283397