Unreal Engine 4 Used to Simulate Critical Incidents and Data Collection by Autonomous Drones

Virtual simulations have long played a major part in modelling real-world situations, and tweaking a simulation can help optimize the real situation it represents. You may have heard of the racing game Project CARS, which allows detailed customization of vehicles; simulated performance of this kind can, in principle, inform the design of actual cars.

Even though simulated environments are common in engineering design and accident response, they are often overlooked for serious but rare situations. What sort of situations? In 2011, Japan faced a nuclear meltdown at Fukushima Daiichi that caused one death, injured 37 people and left 2 with radiation burns. Although such incidents are rare, their consequences can be catastrophic. Because few simulated or automated methods exist for analysing them, investigation and data collection at sites affected by nuclear or radiological accidents are performed manually by trained personnel following set protocols and Standard Operating Procedures (SOPs).

The research paper "Using A Game Engine To Simulate Critical Incidents And Data Collection By Autonomous Drones" by David L. Smyth, Frank G. Glavin and Michael G. Madden of the School of Computer Science, National University of Ireland Galway, Ireland, addresses this problem head-on. It proposes methods of data collection and analysis by autonomous drones, developed and tested in a virtual game-engine environment.

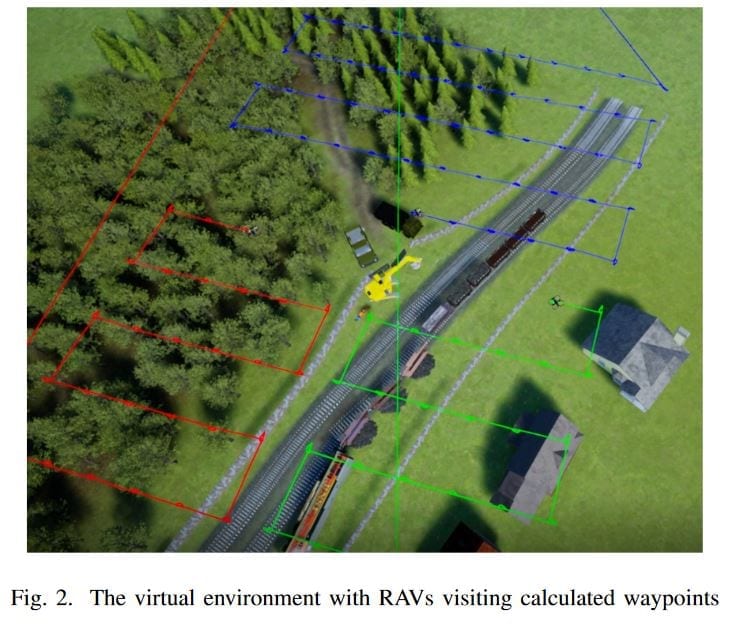

The researchers used Unreal Engine 4 (UE4), one of the most widely used game engines and the foundation of many AAA titles in the modern games industry. With the AirSim plugin integrated, UE4 makes it straightforward to control autonomous drones by issuing commands in a three-dimensional North, East, Down (NED) coordinate frame. Because each of the three axes can be travelled in either direction, NED describes movement in six directions (north/south, east/west, down/up) in a simple, convenient way.
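To make the NED convention concrete, here is a minimal Python sketch. The `NED` class, the `DIRECTIONS` table and the `move` helper are hypothetical illustrations, not AirSim's API. The one convention it relies on is that Down is positive toward the ground, so a point 10 m above the origin has a down coordinate of -10 (this is how AirSim expresses altitude in its NED positions).

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class NED:
    """A position or offset in a local North-East-Down frame, in metres (hypothetical helper)."""
    north: float
    east: float
    down: float

    def __add__(self, other: "NED") -> "NED":
        return NED(self.north + other.north, self.east + other.east, self.down + other.down)

    def scaled(self, k: float) -> "NED":
        return NED(self.north * k, self.east * k, self.down * k)

    def altitude(self) -> float:
        # Down grows toward the ground, so height above the origin is -down.
        return -self.down

    def to_enu(self) -> Tuple[float, float, float]:
        # Reorder into East-North-Up, another common convention.
        return (self.east, self.north, -self.down)


# Each of the three axes can be travelled both ways, giving six unit directions.
DIRECTIONS: Dict[str, NED] = {
    "north": NED(1, 0, 0),
    "south": NED(-1, 0, 0),
    "east": NED(0, 1, 0),
    "west": NED(0, -1, 0),
    "down": NED(0, 0, 1),
    "up": NED(0, 0, -1),
}


def move(position: NED, direction: str, distance: float) -> NED:
    """Return the position reached by travelling `distance` metres in one of the six directions."""
    return position + DIRECTIONS[direction].scaled(distance)


start = NED(0.0, 0.0, 0.0)
hover = move(start, "up", 10.0)
print(hover, hover.altitude())  # NED(north=0.0, east=0.0, down=-10.0) 10.0
```

Flying "up" 10 m therefore produces a negative down coordinate, which is the detail most likely to trip up someone used to altitude-positive frames.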

The researchers explicitly state their reasons for picking UE4:

- UE4 can render near-photorealistic imagery, which supports realistic image acquisition.

- Plugins are available for UE4 that support Remote Aerial Vehicles (RAVs).

- UE4's Blueprint visual scripting system allows rapid prototyping.

While countless scenarios could arise in real life, simulating the environment of each one takes considerable time and effort. The research team simulated one case: a train carrying radioactive material derailing as a result of an accident or malfunction.

Radiation simulated in virtual environment: As mentioned above, UE4 supports a visual scripting system known as Blueprints, a gameplay scripting system that uses a node-based interface to create gameplay elements from within the UE4 Editor. A blueprint was created to simulate gamma radiation in the environment. Assuming that no energy is lost as the radiation passes through the propagating medium, the researchers were able to model the ionizing radiation strength detected at a given location. Similarly, models of chemical and biological dispersion could be developed with corresponding simulated detection. This decouples the development of the decision-support analysis, which acts when hazardous substances are detected, from the details of the actual sensors being developed in the project, streamlining the development of the AI systems.
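The sketch below illustrates both ideas in Python; it is not the authors' blueprint. For an isotropic point source, losing no energy in the medium means the same energy spreads over ever-larger spheres, so intensity falls off with the inverse square of distance; we assume that model here. The names `HazardSensor`, `PointSource`, `SimulatedGammaSensor` and `needs_investigation`, and the "reading at 1 m" units, are hypothetical. The decision logic depends only on the `HazardSensor` interface, which is the decoupling described above.

```python
import math
from dataclasses import dataclass
from typing import List, Protocol, Tuple

Vec3 = Tuple[float, float, float]


class HazardSensor(Protocol):
    """Anything that returns a hazard reading at a position, real or simulated."""

    def read(self, position: Vec3) -> float:
        ...


@dataclass
class PointSource:
    position: Vec3
    strength_at_1m: float  # hypothetical units: the reading 1 m from the source


@dataclass
class SimulatedGammaSensor:
    sources: List[PointSource]
    background: float = 0.0
    min_distance: float = 0.1  # avoids division by zero right at a source

    def read(self, position: Vec3) -> float:
        total = self.background
        for src in self.sources:
            r = max(math.dist(position, src.position), self.min_distance)
            # Assumption: no energy lost in the medium, so intensity falls as 1/r^2.
            total += src.strength_at_1m / (r * r)
        return total


def needs_investigation(sensor: HazardSensor, position: Vec3, threshold: float) -> bool:
    """Decision logic sees only the HazardSensor interface, not the sensor's internals."""
    return sensor.read(position) >= threshold


sensor = SimulatedGammaSensor([PointSource((0.0, 0.0, 0.0), 100.0)])
print(sensor.read((10.0, 0.0, 0.0)), needs_investigation(sensor, (1.0, 0.0, 0.0), 50.0))
```

Replacing `SimulatedGammaSensor` with a wrapper around real hardware would leave `needs_investigation` unchanged.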

AirSim: So far, we can simulate and detect harmful radiation using UE4 blueprints, but what carries the sensor, and how is it controlled? We have already established that autonomous drones, also called Unmanned Aerial Vehicles (UAVs) or Remote Aerial Vehicles (RAVs), will act as our inspectors. This is where AirSim comes in: it is a UE4 plugin that allows RAVs to be programmed to fly autonomously. AirSim is not the only fish in the sea, but the research team chose it for the following reasons:

- Flexibility in the programming language used to develop the algorithms.

- A single plugin can operate multiple RAVs simultaneously.

- It is open-source software, so it can be updated as the research improves.

GPS: An autonomous vehicle must know where it is in 3D space, and this is what a Global Positioning System (GPS) module provides. Using the NED (North, East, Down) frame described earlier, the GPS module initially sets the NED coordinates to (0, 0, 0) at the drone's starting position, and all subsequent movement of the drone is recorded as changes in these coordinates.
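One way to relate raw GPS fixes to that local NED origin is sketched below in Python. It uses a flat-earth (equirectangular) approximation around the home point with a mean Earth radius of 6,371 km; that approximation, the function name `gps_to_local_ned` and the `home` tuple are our assumptions, not necessarily how AirSim or the paper converts coordinates. It is reasonable only over small areas such as a single incident site.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (approximation)


def gps_to_local_ned(
    lat_deg: float, lon_deg: float, alt_m: float, home: Tuple[float, float, float]
) -> Tuple[float, float, float]:
    """Convert a GPS fix to metres North/East/Down relative to `home` (lat, lon, alt).

    Flat-earth approximation: accurate only for short distances from home.
    """
    home_lat, home_lon, home_alt = home
    d_lat = math.radians(lat_deg - home_lat)
    d_lon = math.radians(lon_deg - home_lon)
    north = d_lat * EARTH_RADIUS_M
    # Lines of longitude converge toward the poles, so scale east by cos(latitude).
    east = d_lon * EARTH_RADIUS_M * math.cos(math.radians(home_lat))
    down = -(alt_m - home_alt)  # higher altitude means a more negative down value
    return (north, east, down)


home = (53.27, -9.05, 20.0)  # hypothetical start point, becomes NED (0, 0, 0)
print(gps_to_local_ned(53.271, -9.05, 30.0, home))
```

A fix 0.001° north of home and 10 m higher maps to roughly 111 m north and -10 m down.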

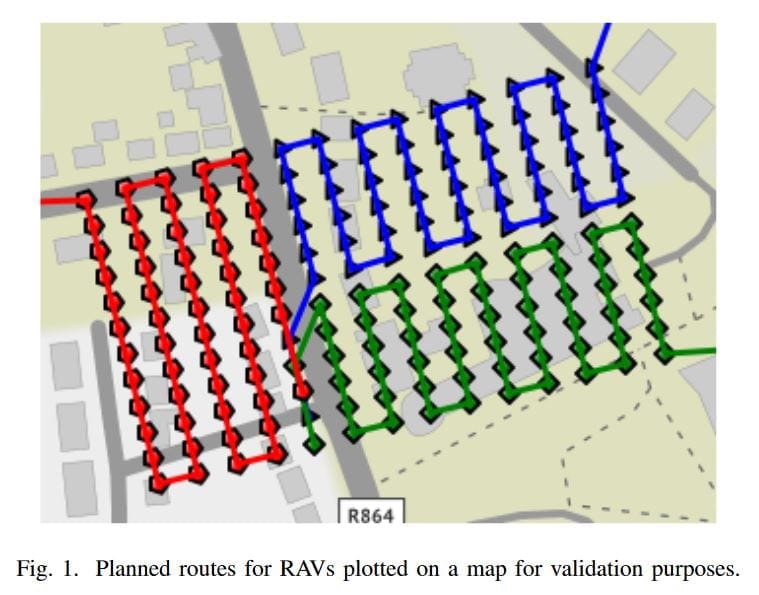

Image acquisition: The drones scout an area affected by a hazardous incident using the following protocol:

- Identify the region that needs to be mapped.

- Divide the region into sub-regions; the centre of each sub-region defines the point where an image must be taken to cover that sub-region.

- Design an algorithm that assigns a set of points to each RAV. These sets should form a partition of the full set of points, and the objective is to minimize the time taken for the longest flight path.

- At each waypoint of the calculated RAV routes, collect an image tagged with its GPS position and camera information, then send all recorded images for analysis.
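The second and third steps can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' algorithm: the area is a rectangle split into square cells whose side `cell` stands in for one image's footprint, and assignment uses a simple greedy heuristic that we swapped in. Minimizing the longest route exactly is a hard combinatorial problem, so the heuristic guarantees a partition but not an optimal one. `grid_waypoints`, `assign_waypoints`, the base position and the cruise speed are all hypothetical, and flight time is taken as path length divided by a constant speed.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def grid_waypoints(width: float, height: float, cell: float) -> List[Point]:
    """Split a width x height area (metres, origin at one corner) into cells and
    return the cell centres in a back-and-forth (boustrophedon) sweep order."""
    cols = math.ceil(width / cell)
    rows = math.ceil(height / cell)
    points: List[Point] = []
    for r in range(rows):
        # Edge cells may be cropped, so take the centre of the cropped cell.
        y = (r * cell + min((r + 1) * cell, height)) / 2
        row = [((c * cell + min((c + 1) * cell, width)) / 2, y) for c in range(cols)]
        if r % 2 == 1:
            row.reverse()  # alternate direction so consecutive points stay close
        points.extend(row)
    return points


def assign_waypoints(points: List[Point], num_ravs: int, base: Point = (0.0, 0.0)):
    """Greedy heuristic: give each waypoint to the RAV whose route would be shortest
    after visiting it. Every waypoint lands in exactly one route (a partition),
    but the longest route is not guaranteed to be minimal."""
    routes: List[List[Point]] = [[] for _ in range(num_ravs)]
    lengths = [0.0] * num_ravs
    last = [base] * num_ravs
    for p in points:
        best = min(range(num_ravs), key=lambda i: lengths[i] + math.dist(last[i], p))
        lengths[best] += math.dist(last[best], p)
        last[best] = p
        routes[best].append(p)
    return routes, lengths


waypoints = grid_waypoints(30.0, 20.0, 10.0)
routes, lengths = assign_waypoints(waypoints, num_ravs=2)
speed_mps = 5.0  # hypothetical cruise speed
print(f"longest flight: {max(lengths) / speed_mps:.1f} s")
```

A real planner would replace the greedy step with a proper min-max routing method, but the inputs and outputs (waypoints in, one route per RAV out) would stay the same.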

Conclusion: By configuring and programming UE4 plugins such as AirSim, together with custom blueprints, the researchers successfully built a simulated system in which investigation procedures for rare accidents can be developed before being put into practice.

Citation: Using a Game Engine to Simulate Critical Incidents and Data Collection by Autonomous Drones, Authors: David L. Smyth, Frank G. Glavin, Michael G. Madden, eprint arXiv:1808.10784 | https://arxiv.org/pdf/1808.10784.pdf