Monitoring Human Behavior in Drone Videos

A new experimental study examines human visual behavior during the observation of videos captured by unmanned aerial vehicles (UAVs). It reports an experiment based on the collection, and the quantitative and qualitative analysis, of eye tracking data recorded while participants watched drone footage.

The work comes from the University of Nantes, France, and aims mainly to set new standards for the observation of drone videos and to examine the factors that influence that process.

The Use of UAVs and the Potential for Improvement

The paper introduces readers to how UAVs are used today, singling out drones linked with surveillance tasks as the “most perspective” and most “controversial” ones in the field.

As the authors put it:

“Over the last years, several applications and techniques have been proposed towards the process of visual moving target tracking based on computer vision algorithms. The higher goal of visual surveillance systems can be associated with the process of interpretation of existing patterns connected with moving objects of the field of view (FOV).”

They also point to the potential of UAV systems that simulate human visual behavior: understanding how UAV videos are perceived by human vision, and how much critical information they deliver, could help improve observation systems.

According to the authors, two basic mechanisms influence visual attention: “bottom-up” and “top-down” processes, which are thought to operate on raw sensory input and on longer-term cognitive strategies, respectively.

Building on these mechanisms and models, they study visual attention and the eye movements performed, with a view to validating computational visual attention models against gaze data.

The Methodology Used in the Paper

A key part of the methodology, examined in detail in the paper, was the visual stimuli: multiple videos adapted from the UAV123 database. The experiment was performed in five successive sets, and the authors took extra care to ensure the precision of the collected data.

They used an EyeLink 1000 Plus eye tracker to record gaze and a 23.8-inch computer monitor to present the visual stimuli. The results they present rest on fixation detection, eye tracking metrics, data visualization (with heatmaps), and quantitative and qualitative analysis.
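To make the fixation detection step more concrete, here is a minimal sketch of one common approach, a dispersion-threshold (I-DT style) detector. It is not necessarily the algorithm used in the paper: the function name, the `(t_ms, x_px, y_px)` sample layout, and both threshold values are assumptions chosen for illustration.

```python
from typing import List, Tuple

Sample = Tuple[float, float, float]            # (t_ms, x_px, y_px), assumed layout
Fixation = Tuple[float, float, float, float]   # (start_ms, duration_ms, cx, cy)


def _dispersion(window: List[Sample]) -> float:
    """Horizontal extent plus vertical extent of the gaze samples."""
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(
    samples: List[Sample],
    max_dispersion_px: float = 30.0,   # illustrative threshold, not from the paper
    min_duration_ms: float = 100.0,    # illustrative threshold, not from the paper
) -> List[Fixation]:
    """Group consecutive gaze samples that stay within a small area."""
    fixations: List[Fixation] = []
    i, n = 0, len(samples)
    while i < n:
        # Find the first sample that makes the window span the minimum duration.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration_ms:
            j += 1
        if j >= n:
            break  # not enough data left for another fixation
        if _dispersion(samples[i:j + 1]) <= max_dispersion_px:
            # Extend the window while the samples remain tightly clustered.
            while j + 1 < n and _dispersion(samples[i:j + 2]) <= max_dispersion_px:
                j += 1
            window = samples[i:j + 1]
            cx = sum(s[1] for s in window) / len(window)
            cy = sum(s[2] for s in window) / len(window)
            fixations.append((samples[i][0], samples[j][0] - samples[i][0], cx, cy))
            i = j + 1
        else:
            i += 1  # gaze is moving; slide the window forward by one sample
    return fixations
```

Fixation durations and counts, the kind of eye tracking metrics the article mentions, can then be read directly from the returned tuples.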

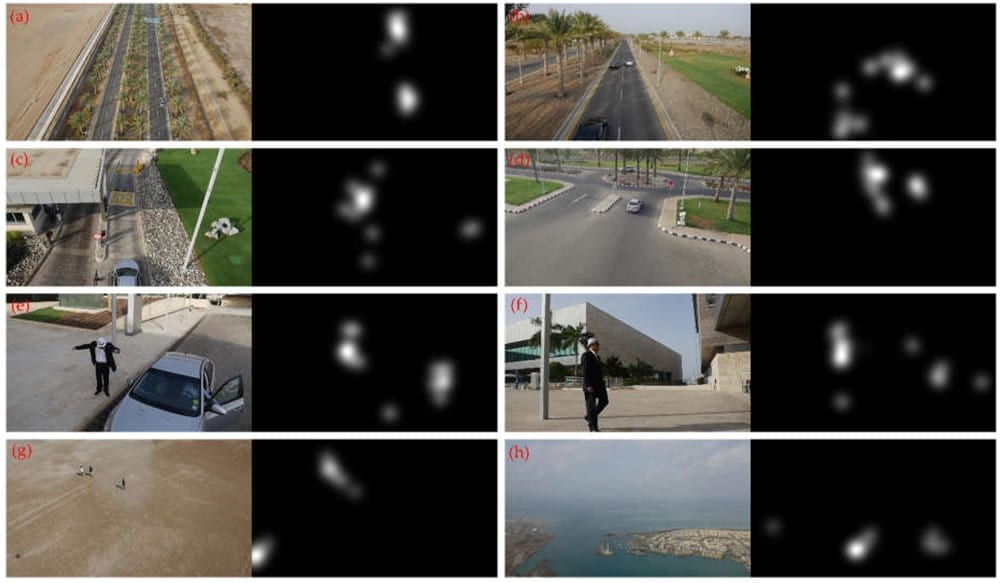

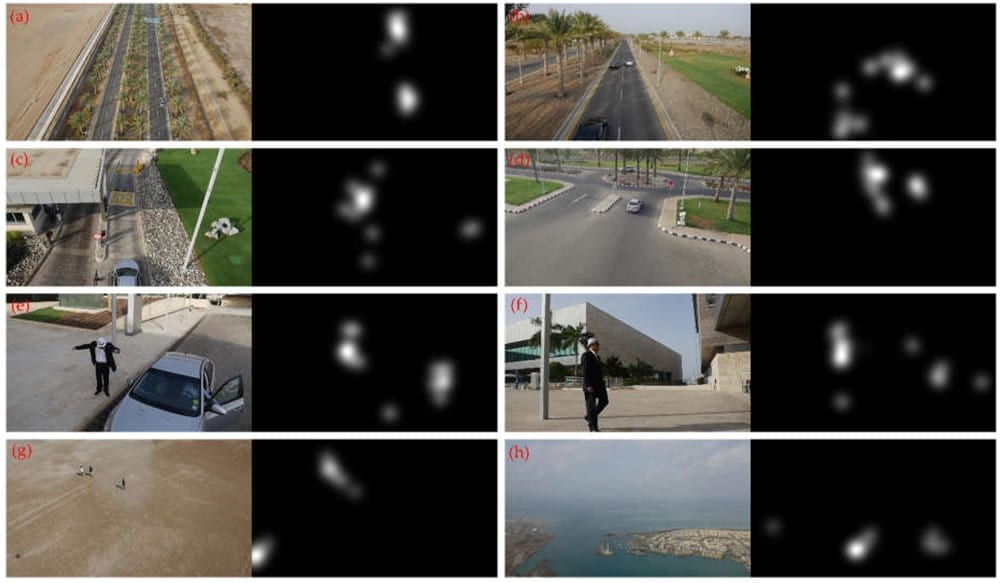

One of the conclusions was the one stating that “fixations durations are directly linked with the perceived complexity as well as with the level of information depicted in an observed visual scene.” while the heatmap visualizations showed that the “observers’ attention, even in a free viewing task, is drawn in scene objects which are characterized by the element of motion as well as in other features of the observed field of view with heterogenic elements.”

Sample frames of the 19 different videos selected from the UAV123 database and used as visual stimuli in the performed experimental study. The selected names of all videos are the same with these provided by the original source.

Sample (frames) heatmap visualizations; attention is drawn in a moving object (car) and in a street label (a), in an object of the scene with different color (red) from the surrounding environment (b), in a warning (road) sign with different color (yellow) from the surrounding environment (c), in the main and other moving objects (cars) as well as in street signs (d), in human actions (e), in human faces (f), in UAV’s shadow (g), and in buildings with a different shape than the surrounding ones (h).
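For readers curious how a gaze heatmap of this kind can be built, the following is a minimal sketch that places a Gaussian at each fixation, weighted by its duration, and normalizes the result. The function name, the `(cx, cy, duration_ms)` input layout, and the `sigma_px` spread are assumptions for illustration; the paper's own visualization pipeline may differ.

```python
import numpy as np


def fixation_heatmap(fixations, width, height, sigma_px=40.0):
    """Return a (height, width) array in [0, 1] built from fixations.

    fixations: iterable of (cx, cy, duration_ms) in pixel coordinates
               (hypothetical layout chosen for this sketch).
    sigma_px:  spread of each Gaussian; an illustrative value only.
    """
    # Pixel coordinate grids: ys varies along rows, xs along columns.
    ys, xs = np.mgrid[0:height, 0:width]
    heat = np.zeros((height, width), dtype=float)
    for cx, cy, duration_ms in fixations:
        squared_distance = (xs - cx) ** 2 + (ys - cy) ** 2
        # Longer fixations contribute more to the map.
        heat += duration_ms * np.exp(-squared_distance / (2.0 * sigma_px ** 2))
    peak = heat.max()
    return heat / peak if peak > 0 else heat
```

The normalized array can be overlaid on a video frame with any plotting library, using a color map and partial transparency.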

General Conclusions from the Study

According to the results, the UAV’s flight altitude is the dominant factor affecting the visual attention process, while the presence of sky in the video background seems to be the least influential factor.

The authors also noted that the main surrounding environment, the main size of the observed objects, and the main perceived angle between the UAV flight plane and the ground all play a vital role in the observer’s visual reaction to these stimuli.

The heatmap visualizations show the most salient locations in the UAV videos used. All of the data, including raw gaze data and fixation and saccade events, is distributed to the scientific community as a new dataset (dubbed ‘EyeTrackUAV’) intended to serve as an objective ground truth in future studies.

Citation: “Monitoring Human Visual Behavior during the Observation of Unmanned Aerial Vehicles (UAVs) Videos”. Vassilios Krassanakis, Matthieu Perreira Da Silva and Vincent Ricordel, Drones 2018, 2(4), 36; doi:10.3390/drones2040036 – https://www.mdpi.com/2504-446X/2/4/36/htm