Camera Drone with RGB and Thermal Cameras for Optical Sectioning

Drone technology, combined with artificial intelligence (AI) and other advanced techniques, can help solve several modern-day technological challenges. In airborne optical sectioning (AOS), for example, camera drones are used for synthetic aperture imaging. By computationally removing occluding vegetation or trees when inspecting the ground surface, AOS supports various applications in archaeology, forestry, and agriculture.

Synthetic apertures (SA) find applications in many fields, such as radar, radio telescopes, microscopy, sonar, ultrasound, LiDAR and optical imaging.

A recent study published in the MDPI journal Remote Sensing explored how to design synthetic aperture sampling patterns and sensors in an optimal and practically efficient way. The researchers derived a statistical model that reveals practical limits to both synthetic aperture size and number of samples for the application of occlusion removal.

AOS is not only cheaper than alternative airborne scanning technologies (such as LiDAR), but also delivers surface colour information and achieves higher sampling resolutions. In contrast to photogrammetry, it does not suffer from inaccurate correspondence matches and long processing times. Rather than measuring, computing, and rendering 3D point clouds or triangulated 3D meshes, AOS applies image-based rendering for 3D visualization. However, AOS, like photogrammetry, is passive: it only receives reflected light and does not emit electromagnetic or sound waves to measure the backscattered signal. Thus, it relies on an external energy source (i.e., sunlight).

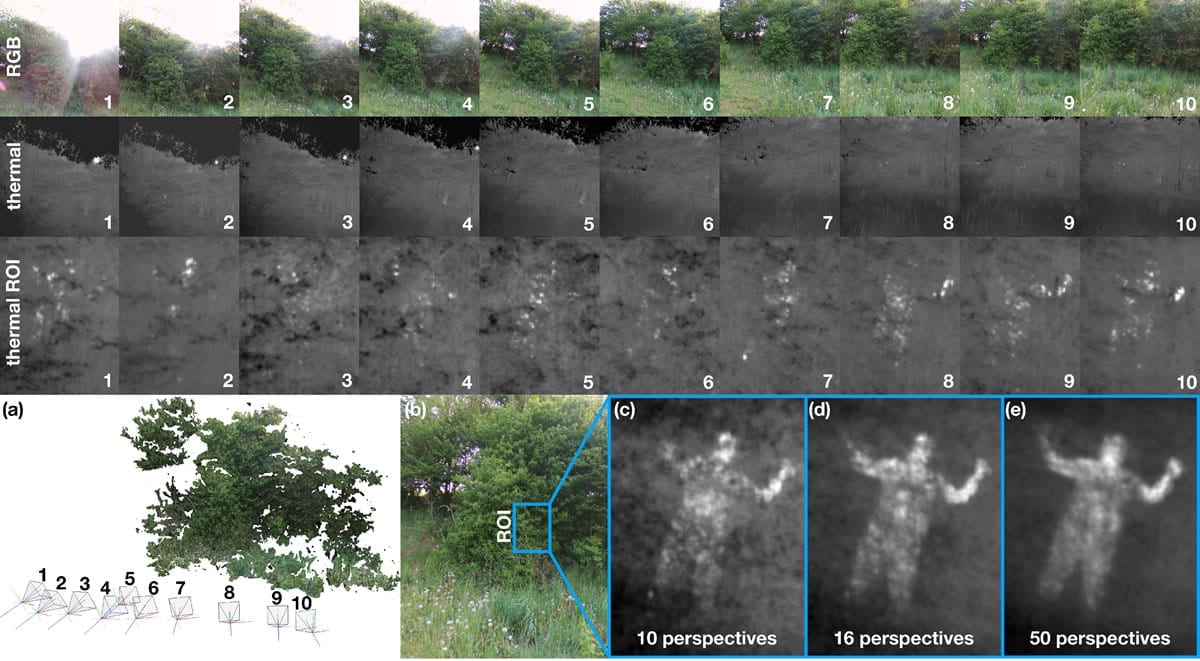

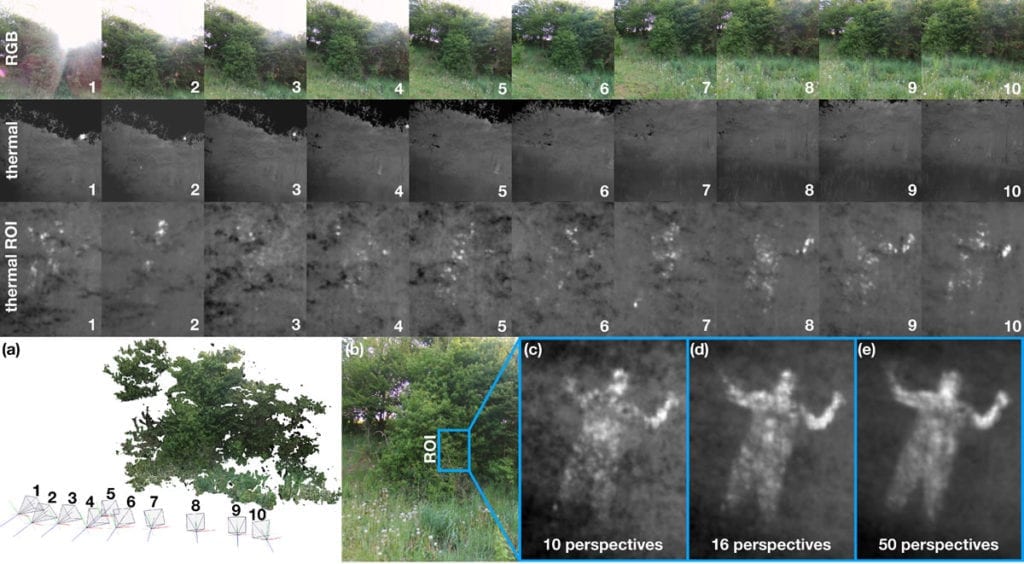

RGB images (first row) and corresponding tone-mapped thermal images (second row), close-up of thermal images within region of interest ROI (third row), results of pose estimation and 3D reconstruction (a), RGB image from pose 5 with indicated ROI (b), TAOS rendering results of ROI for 10 perspectives (c), 16 perspectives (d), and 50 perspectives (e). See also supplementary video.

Summarizing their findings in an article, the researchers presented the first results of AOS applied to thermal imaging in outdoor environments. The radiated heat signal of strongly occluded targets, such as human bodies hidden in dense shrub, can be made visible by integrating multiple thermal recordings from slightly different perspectives, even though the targets are entirely invisible in RGB recordings or unidentifiable in single thermal images.

The team refers to the extension of AOS to thermal imaging as Thermal Airborne Optical Sectioning (TAOS). In contrast to AOS, TAOS does not rely on the reflection of sunlight from the target, which is weak under dense occlusion. Instead, it collects bits of heat radiation through the occluder volume over a wide synthetic aperture range and computationally combines them into a clear image. This crucial process requires precise estimation of the drone’s position and orientation for each capturing pose.
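The integration step described above can be illustrated with a toy simulation. This is a minimal sketch under strong assumptions, not the researchers' GPU implementation: every view is assumed to differ only by a known integer pixel translation on a single focal plane (a real system would project each image using the full estimated pose), the occluders are assumed to be at ambient temperature, and the function names and temperature values are hypothetical.

```python
import numpy as np


def shift_image(img, dy, dx, fill=np.nan):
    """Translate a 2D image by integer (dy, dx); uncovered pixels get `fill`."""
    h, w = img.shape
    out = np.full((h, w), fill, dtype=float)
    if abs(dy) >= h or abs(dx) >= w:
        return out  # shifted completely out of the frame
    src_rows = slice(max(0, -dy), min(h, h - dy))
    src_cols = slice(max(0, -dx), min(w, w - dx))
    dst_rows = slice(max(0, dy), min(h, h + dy))
    dst_cols = slice(max(0, dx), min(w, w + dx))
    out[dst_rows, dst_cols] = img[src_rows, src_cols]
    return out


def integrate_views(images, shifts):
    """Register each view by its (dy, dx) shift and average the valid pixels."""
    stack = np.stack([shift_image(im, dy, dx) for im, (dy, dx) in zip(images, shifts)])
    valid = ~np.isnan(stack)
    count = valid.sum(axis=0)
    total = np.where(valid, stack, 0.0).sum(axis=0)
    # Pixels that no view covers stay NaN instead of dividing by zero.
    return np.divide(total, count, out=np.full(total.shape, np.nan), where=count > 0)


def simulate_and_integrate(n_views=50, size=64, occlusion=0.8, seed=0):
    """Toy scene: a warm 8x8 target seen through random occluders from shifted views."""
    rng = np.random.default_rng(seed)
    ambient, body = 20.0, 37.0  # hypothetical temperatures in deg C
    ground = np.full((size, size), ambient)
    c = size // 2
    ground[c - 4:c + 4, c - 4:c + 4] = body

    views, back_shifts = [], []
    for _ in range(n_views):
        dy, dx = (int(v) for v in rng.integers(-5, 6, size=2))
        view = shift_image(ground, dy, dx, fill=ambient)  # camera offset
        occluded = rng.random(view.shape) < occlusion     # new occluder pattern per view
        view[occluded] = ambient                          # occluder hides the ground
        views.append(view)
        back_shifts.append((-dy, -dx))                    # undo the offset before averaging
    return integrate_views(views, back_shifts), views[0]
```

In this toy setup a single view shows only scattered warm pixels, while the averaged image shows the target as a contiguous region raised above the ambient level, because the target stays registered across views and the occluder pattern does not.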

Because GPS, internal inertial sensors, and compass modules are too imprecise, and because lightweight thermal cameras have resolutions too low for reliable pose estimation, an additional high-resolution RGB camera is used to support accurate pose estimation based on computer vision. TAOS might find applications in areas where classical airborne thermal imaging is already used, such as search-and-rescue, animal inspection, forestry, agriculture, or border control. In these areas, it makes it possible to see through densely occluding vegetation.

Materials and Methods:

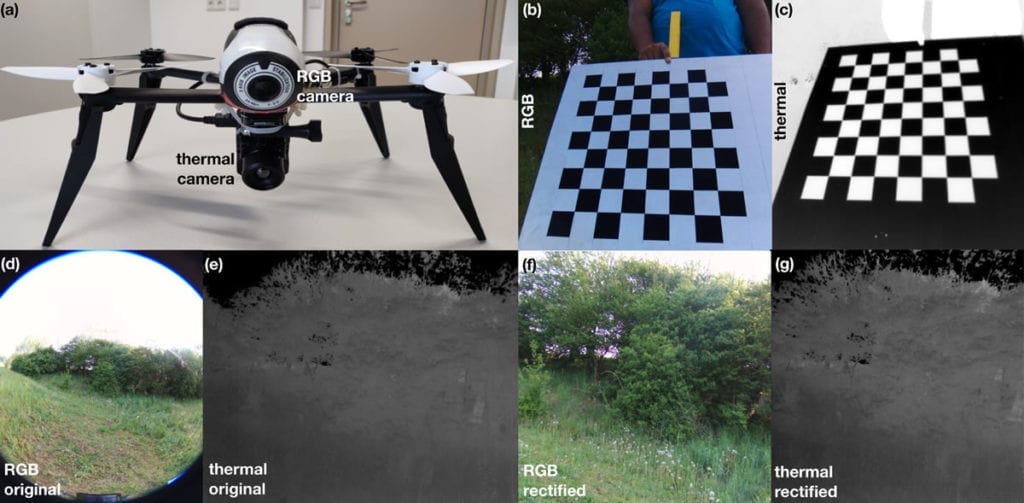

The drone used for the experiment was a Parrot Bebop 2 (Parrot Drones SAS, Paris, France) with an internal RGB camera (14 MP CMOS sensor, fixed 178° FOV fisheye lens, 8 bit per color channel). The team rectified and cropped the original image and downscaled the resolution to 1024×1024 pixels for performance reasons. They attached an external thermal camera at the drone’s vertical gravity axis (a FLIR Vue Pro; FLIR Systems, Inc., Wilsonville, Oregon, USA; 640×512 pixels rectified and cropped to 512×512 pixels; fixed 81° FOV lens; 14 bit for a 7.5–13.5 μm spectral band). Both cameras are rectified to the same field of view of 53°.
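To make the shared 53° field of view concrete, the short sketch below converts it into a focal length in pixels for both rectified image sizes. It assumes an ideal, distortion-free pinhole model after rectification; the article does not give the actual calibration values, and the function names are hypothetical.

```python
import math


def focal_length_px(width_px, fov_deg):
    """Focal length in pixels of an ideal pinhole camera with the given FOV."""
    return (width_px / 2.0) / math.tan(math.radians(fov_deg) / 2.0)


def degrees_per_pixel(width_px, fov_deg):
    """Average angular sampling across the image width."""
    return fov_deg / width_px


rgb_f = focal_length_px(1024, 53.0)     # rectified RGB image, 1024x1024
thermal_f = focal_length_px(512, 53.0)  # rectified thermal image, 512x512

print(f"RGB focal length:     {rgb_f:.1f} px")
print(f"Thermal focal length: {thermal_f:.1f} px")
print(f"Thermal sampling:     {degrees_per_pixel(512, 53.0):.3f} deg/px")
```

Because both images cover the same field of view, the RGB image samples the scene twice as finely along each axis as the thermal image, which is consistent with using it for pose estimation.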

For pose estimation, the general-purpose structure-from-motion and multi-view stereo pipeline, COLMAP (https://colmap.github.io), was used. Image integration was implemented on the GPU, based on Nvidia’s CUDA.
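As an illustration of what the pose estimation step yields, the sketch below reads camera poses from COLMAP's text export (`images.txt`) and converts each one to a camera position in world coordinates. The article does not say how the team exported or consumed the poses, so this is only one plausible route. It relies on the documented COLMAP convention that each image has a header line `IMAGE_ID QW QX QY QZ TX TY TZ CAMERA_ID NAME` followed by a line of 2D points, and that the pose maps world to camera coordinates; the function names are hypothetical.

```python
import math


def quat_to_rotmat(qw, qx, qy, qz):
    """Rotation matrix from a (w, x, y, z) quaternion, normalized first."""
    n = math.sqrt(qw * qw + qx * qx + qy * qy + qz * qz)
    w, x, y, z = qw / n, qx / n, qy / n, qz / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def camera_center(R, t):
    """World-space camera position C = -R^T t for a world-to-camera pose (R, t)."""
    return tuple(-sum(R[j][i] * t[j] for j in range(3)) for i in range(3))


def parse_images_txt(text):
    """Return {image name: {"R", "t", "center"}} from the contents of images.txt."""
    # Keep blank lines: an image without 2D points still has an (empty) second line.
    lines = [ln for ln in text.splitlines() if not ln.startswith("#")]
    poses = {}
    for header in lines[0::2]:  # every other line is a pose header
        parts = header.split()
        if len(parts) < 10:
            continue
        qw, qx, qy, qz, tx, ty, tz = map(float, parts[1:8])
        name = " ".join(parts[9:])
        R = quat_to_rotmat(qw, qx, qy, qz)
        t = (tx, ty, tz)
        poses[name] = {"R": R, "t": t, "center": camera_center(R, t)}
    return poses
```

A typical use would be `poses = parse_images_txt(open("images.txt").read())`, after which the camera centers describe the sampled synthetic aperture.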

Discussion:

TAOS has the potential to increase depth penetration through densely occluding vegetation for airborne thermal imaging applications. Fast update rates are required for many such applications that inspect moving targets (such as search-and-rescue, animal inspection, or border control).

Compared to direct thermal imaging, TAOS introduces an additional processing overhead for image rectification, pose estimation, and image integration.

The team measured approximately 8.5 s for all N = 10 images together (i.e., on average 850 ms per image). Real-time pose estimation, image rectification, and rendering techniques will reduce this overhead at least to 107 ms per image. Thus, if direct thermal imaging delivers recordings at 10 fps, TAOS (for N = 10) would deliver approximately 7 fps at 512×512 pixels, at the cost of a slightly larger pose estimation error (0.49 pixels on the sensor of the thermal camera). However, TAOS images integrate a history of N frames.

In this case, direct thermal imaging integrates over 100 ms (1 frame), whereas TAOS integrates over 1 s (N = 10 frames). Moving targets might result in motion blur that can lead to objects being undetectable during TAOS integration (depending on the object’s size and position in the scene). TAOS can compensate for known object motion, so that moving objects can be focused during integration.
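The integration window and the idea of compensating for known motion can be written out in a few lines. This is a sketch under the assumption of a target moving at a known, constant velocity in the image plane; the article does not describe how the compensation is implemented, and the function names and the example velocity are hypothetical.

```python
def integration_window_s(n_frames, fps):
    """Time span covered by n_frames recorded at the given frame rate."""
    return n_frames / fps


def compensation_offsets_px(n_frames, fps, velocity_px_per_s):
    """Per-frame (dy, dx) shifts that move a target with known constant
    image-plane velocity back to its position in frame 0."""
    vy, vx = velocity_px_per_s
    return [(-vy * i / fps, -vx * i / fps) for i in range(n_frames)]


direct_window = integration_window_s(1, 10)   # single thermal frame at 10 fps
taos_window = integration_window_s(10, 10)    # N = 10 frames at 10 fps

# Hypothetical target drifting 20 px/s to the right in the image.
offsets = compensation_offsets_px(10, 10, (0.0, 20.0))
```

Applying these offsets to the frames before averaging keeps the moving target registered, at the price of blurring the static background instead.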

Conclusions:

The team concluded that for AOS recordings they were able to reduce the synthetic aperture area by a factor of approximately 6.5–12.75 and the number of samples by a factor of approximately 10–20 without significant loss in visibility.

Synthetic aperture imaging has great potential for occlusion removal in different application domains. In the visible range, it has been used to make archaeological discoveries. In the infrared range, it might find applications in search-and-rescue, animal inspection, or border control. Using camera arrays instead of moving single cameras that sequentially scan over a wide aperture range would avoid the processing overhead due to pose estimation and the motion blur caused by moving targets, but would also be more complex, cumbersome, and less flexible. The team would like to investigate the possibilities of 3D reconstruction by performing thermal epipolar slicing (e.g., as has been done for the visual domain). Investigating the potential of AOS together with other spectral bands for other applications, such as in forestry and agriculture, will be part of their future work.

Citation: Thermal Airborne Optical Sectioning, Indrajit Kurmi, David C. Schedl and Oliver Bimber, Institute of Computer Graphics, Johannes Kepler University Linz, 4040 Linz, Austria, Remote Sens. 2019, 11(14), 1668; https://doi.org/10.3390/rs11141668, https://www.mdpi.com/2072-4292/11/14/1668