Simulating Real-Time Hyperspectral Processing Algorithms On-Board Drones

Hyperspectral imaging technology has been gaining popularity over the years, primarily because it reveals subtle spectral differences that mostly go undetected by other optical devices.

The latest hyperspectral sensors have shrunk substantially in size and reached very competitive prices, making them a sought-after choice in applications such as defense, security, mineral identification, and environmental protection, among others. The expansion of hyperspectral technology in tandem with unmanned aerial vehicles (UAVs) has opened a new era in remote sensing, enabling the development of ad-hoc platforms at a much lower price, with some relevant advantages compared to satellites and airplanes. These platforms have the potential to perform missions that provide results in real time, making hyperspectral imaging well suited to monitoring and surveillance applications that require a prompt reaction to a stimulus. However, the use of such autonomous systems equipped with hyperspectral sensors poses a new challenge for researchers and developers: not only adapting the algorithm implementations so that they work in real time as images are being captured, but also making the appropriate modifications so that they can coexist with the autonomous system control.

A research team comprising Pablo Horstrand, José Fco. López, Sebastián López, Tapio Leppälampi, Markku Pusenius and Martijn Rooker, from the Institute for Applied Microelectronics (IUMA) at the University of Las Palmas de GC (ULPGC) in Spain, Creanex Oy in Finland, and the R&D Projects Department of TTTech Computertechnik AG in Austria, is working on these challenges. Their contribution consists of introducing a novel intermediate phase for testing and validating the behaviour of hyperspectral image processing algorithms for real-time systems, based on a simulation environment that provides a faithful virtual representation of the real world. The proposal aims to significantly narrow the gap between the offline implementation of an image processing algorithm and its use in a real scenario.

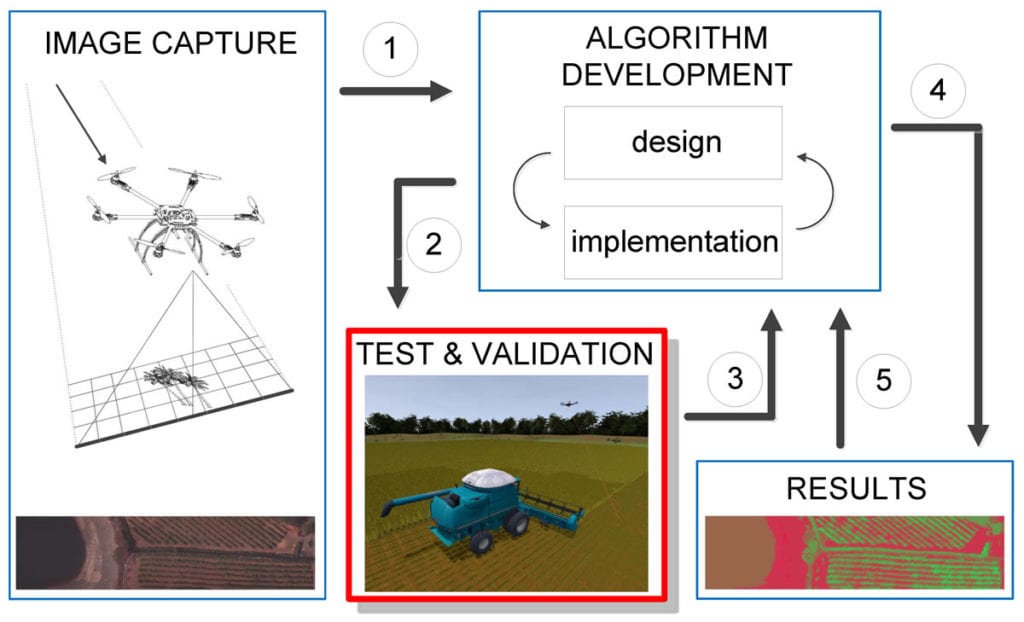

Proposed validation and verification strategy.

Currently, the algorithm development process starts with a design phase in which the needs of the target application are analysed and possible solutions are defined. This is followed by the implementation phase, where the solution is coded in a high-level language so that results are available quickly and the search for the most suitable solution can begin. This latter phase is usually carried out offline, with synthetic or real hyperspectral images downloaded from public repositories. The main problem begins when the image processing algorithms are transferred to the target device and the real system is brought to the field to run test campaigns. Aspects overlooked in the offline design and implementation phases cause the system not to perform as expected. A back-and-forth process then starts in an attempt to achieve the desired results with the algorithm, incurring unforeseen delays and extra costs.

In their paper, the researchers describe how they based their work on a pushbroom hyperspectral camera, which is currently one of the most widely chosen sensors for mounting on a UAV due to its trade-off between spatial and spectral resolution. Integrating this type of camera into a UAV is quite a complex task, since the captured frames are individual lines that do not overlap, and hence much more effort has to be put into the acquisition and processing stages in order to generate a 2D image.
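To make the line-by-line nature of pushbroom acquisition concrete, the following minimal sketch stacks single-line frames into a data cube and extracts a 2D image for one band. It is an illustration only, not the authors' implementation: the class `PushbroomAssembler`, the helper `fake_frame`, and the tiny sensor geometry are all hypothetical, and the sketch assumes ideal conditions (constant speed and altitude, no geometric correction), which is exactly what a real flight does not guarantee.

```python
from typing import List

# One pushbroom frame: a single spatial line, stored as pixels x bands.
Line = List[List[float]]


class PushbroomAssembler:
    """Accumulates single-line frames into a (lines, pixels, bands) cube.

    Hypothetical helper for illustration; assumes every frame maps to
    exactly one image row, i.e. no motion compensation is applied.
    """

    def __init__(self, pixels: int, bands: int) -> None:
        self.pixels = pixels
        self.bands = bands
        self.lines: List[Line] = []

    def add_frame(self, frame: Line) -> None:
        # Reject frames that do not match the assumed sensor geometry.
        if len(frame) != self.pixels or any(len(px) != self.bands for px in frame):
            raise ValueError("frame shape does not match sensor geometry")
        self.lines.append(frame)

    def band_image(self, band: int) -> List[List[float]]:
        """Return the 2D image (lines x pixels) for one spectral band."""
        return [[px[band] for px in line] for line in self.lines]


def fake_frame(line_index: int, pixels: int, bands: int) -> Line:
    """Synthetic frame whose values encode (line, pixel, band) for easy checking."""
    return [
        [float(line_index * 100 + p * 10 + b) for b in range(bands)]
        for p in range(pixels)
    ]


# Simulate five successive captures of a 4-pixel, 3-band sensor.
assembler = PushbroomAssembler(pixels=4, bands=3)
for i in range(5):
    assembler.add_frame(fake_frame(i, pixels=4, bands=3))

image = assembler.band_image(1)  # 5 lines x 4 pixels for band index 1
```

Because consecutive lines share no overlap, any irregularity in the platform's motion shows up directly as distortion in `image`, which is why the acquisition stage needs careful treatment before the processing algorithms run.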

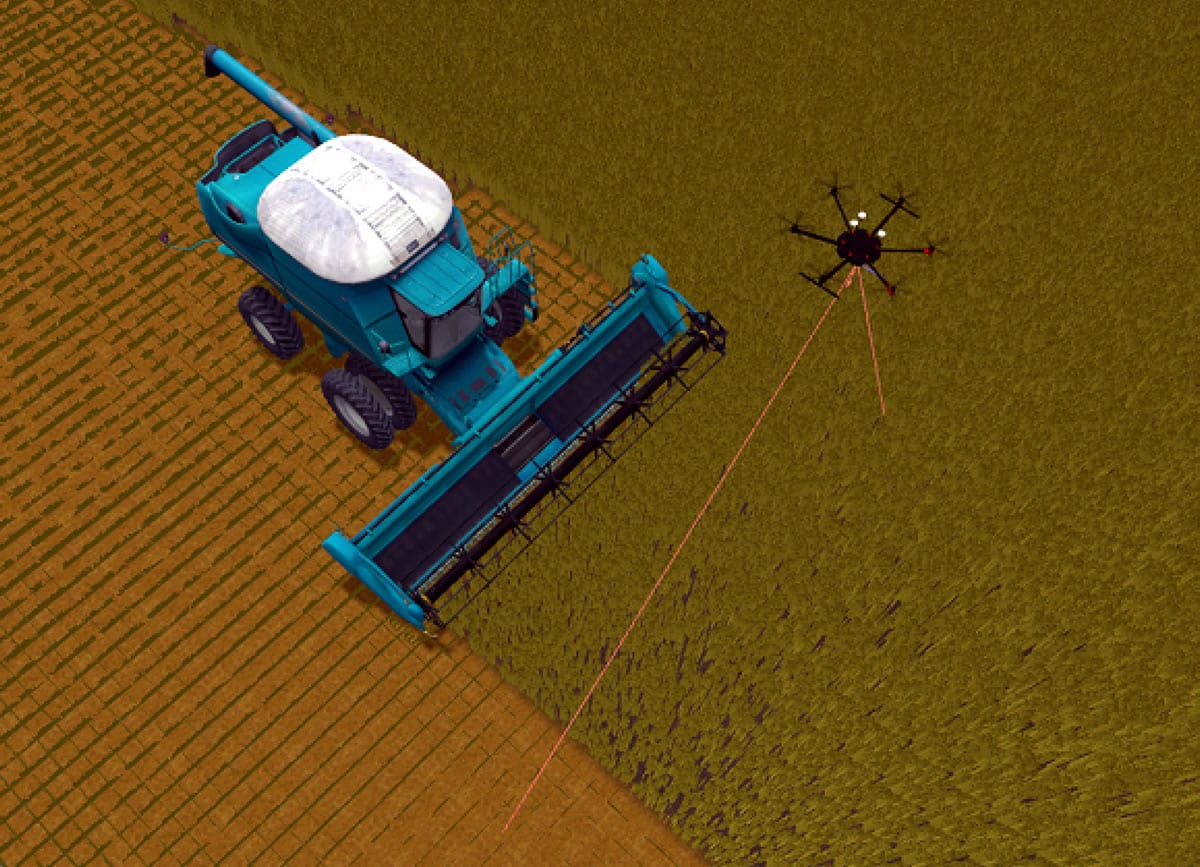

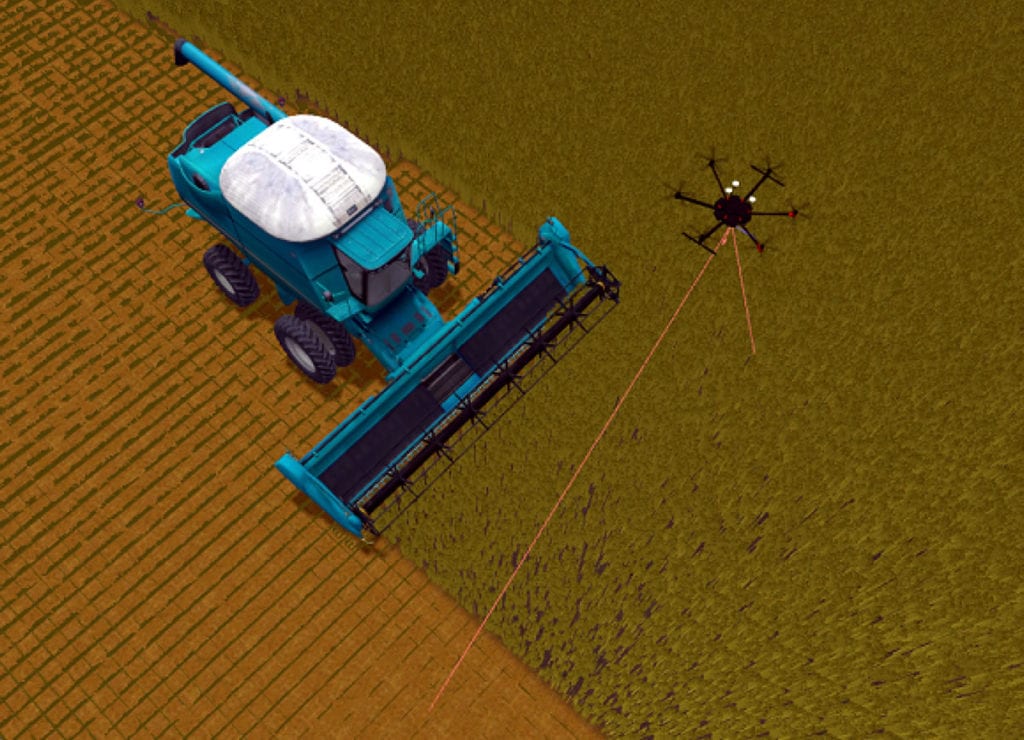

Automated harvesting process, with the unmanned aerial vehicle (UAV) in obstacle detection mode.

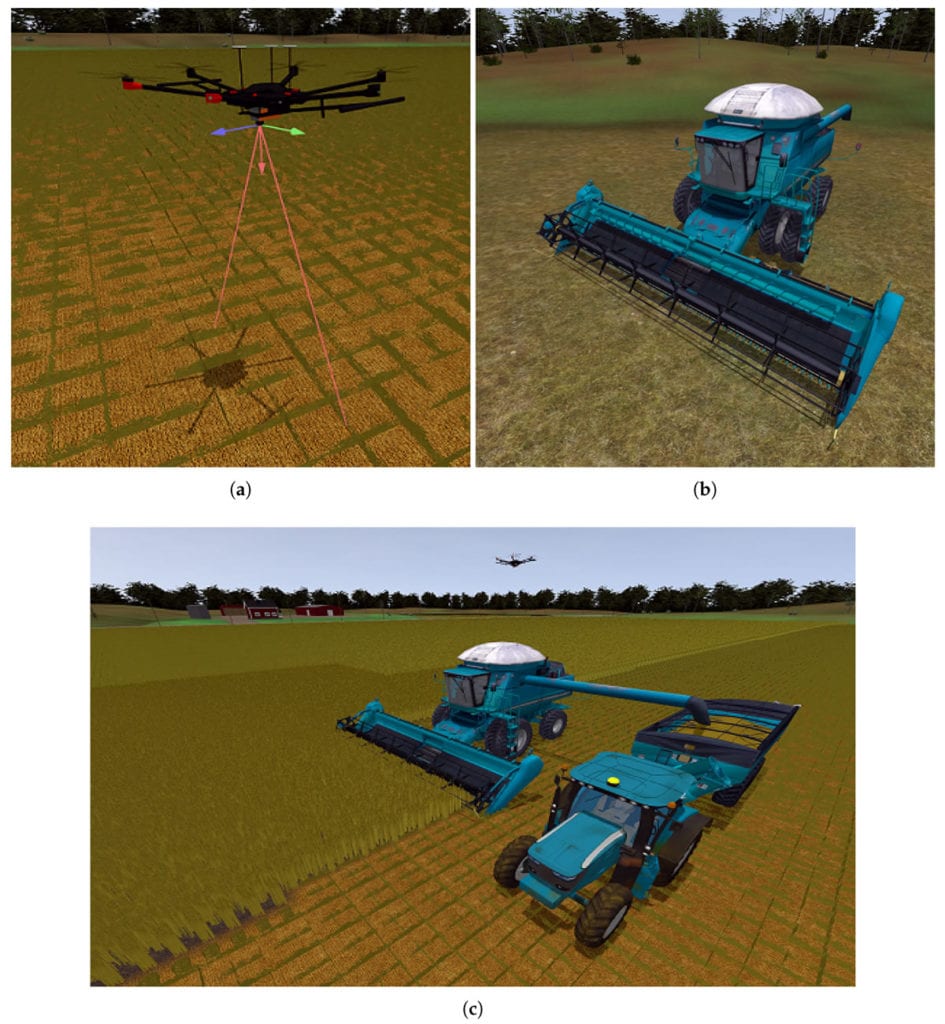

Farming simulation environment and machinery representation. (a) Drone representation; (b) combine-harvester representation; (c) drone, combine-harvester and tractor representation, together with a simulated farming environment.

The virtual environment developed in this work offers the possibility of simulating and stimulating the sensor, virtually creating hyperspectral images as they would be captured by the targeted hyperspectral sensor and applying the processing algorithms to them in order to obtain the results straight away. In this way, before real tests are performed with the whole system, simulations can be run several times with modifications to the code and/or the input parameters. This shortens the testing phase and reduces the overall manpower required, which in the end translates into considerable cost savings for the whole system implementation.

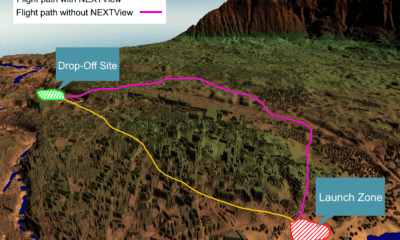

The research presented in this paper has been demonstrated within a use case of a European H2020 ECSEL project named ENABLE-S3 (European Initiative to Enable Validation for Highly Automated Safe and Secure Systems). The goal of this particular scenario, called the “Farming Use Case”, is the automated driving of a harvester in an agricultural field performing the cropping, watering or pesticide spraying tasks. Initially, a drone equipped with a hyperspectral camera inspects the terrain in order to generate a set of maps with different vegetation indices, which provide information on the status of the crop. This information, together with the specific coordinates, is then sent to the harvester, which drives autonomously to the region of interest and starts its work. Additionally, in order to minimize the risk of fatal accidents due to agricultural mowing operations and/or collisions with big obstacles (animals or rocks), a scheme has been considered in which the drone flies at a certain height above ground and some meters ahead of the harvester (the security distance), scanning the field for the possible appearance of static or dynamic objects. Hence, due to the criticality of the decisions that have to be made in real time within this use case, it is advisable to have the proposed environment available in order to exhaustively evaluate the behaviour of the whole system in a realistic way before operating in the field.
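As an illustration of what a vegetation index map involves, the sketch below computes the widely used Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red), for every pixel of a small cube. The article does not say which indices the authors compute, so NDVI is used here purely as a representative example; the function names, the band centres (670 nm and 800 nm), and the sample reflectance values are assumptions made for this sketch.

```python
from typing import List, Sequence

Cube = List[List[List[float]]]  # lines x pixels x bands


def nearest_band(wavelengths_nm: Sequence[float], target_nm: float) -> int:
    """Index of the band whose centre wavelength is closest to target_nm."""
    return min(
        range(len(wavelengths_nm)),
        key=lambda i: abs(wavelengths_nm[i] - target_nm),
    )


def ndvi_map(
    cube: Cube,
    wavelengths_nm: Sequence[float],
    red_nm: float = 670.0,   # assumed red band centre
    nir_nm: float = 800.0,   # assumed near-infrared band centre
) -> List[List[float]]:
    """Compute NDVI per pixel; pixels with a zero denominator map to 0.0."""
    red = nearest_band(wavelengths_nm, red_nm)
    nir = nearest_band(wavelengths_nm, nir_nm)
    result = []
    for line in cube:
        row = []
        for px in line:
            denom = px[nir] + px[red]
            row.append((px[nir] - px[red]) / denom if denom else 0.0)
        result.append(row)
    return result


# Hypothetical 1-line, 2-pixel cube with three bands (green, red, NIR).
wavelengths = [550.0, 670.0, 800.0]
cube = [[[0.1, 0.1, 0.5],    # strong NIR response: vegetation-like pixel
         [0.1, 0.3, 0.3]]]   # equal red and NIR: index of zero

index_map = ndvi_map(cube, wavelengths)
```

Values close to 1 suggest dense, healthy vegetation, while values near zero or below suggest bare soil or non-vegetated surfaces; a map of such values, paired with coordinates, is the kind of information the drone would pass to the harvester.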

In order to cope with the requirements of this use case, the research team used the pre-existing agriculture graphical simulation environment (AgSim), which made it possible to virtually recreate the different scenarios considered within the ENABLE-S3 farming use case, introducing different machinery such as a harvester, a tractor, and a drone onto a farming field. More precisely, the system that has been simulated is the one presented in and is based on a Matrice 600 drone and a Specim FX10 hyperspectral camera. The proposed environment is able to test the drone control routines simultaneously with the capturing and processing of the hyperspectral images. This software-in-the-loop testing setup permits the validation and verification of different hyperspectral image processing algorithms such as image generation, vegetation index map creation and target detection. Another highlight is that the proposed tool has been conceived in a modular way, so different real-time processing algorithms can be tested, or different hyperspectral sensor characteristics can be used, just by replacing the corresponding module.
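The modular idea can be sketched in a few lines: if the sensor model and the processing algorithm are separate, interchangeable components, either one can be replaced without touching the rest of the loop. The code below is a hypothetical illustration of that design principle and does not reflect the actual AgSim interfaces; `SimulationRun`, the two sensor functions, and the two algorithm functions are all invented for this example.

```python
from dataclasses import dataclass
from typing import Callable, List

Frame = List[List[float]]  # one captured line: pixels x bands


@dataclass
class SimulationRun:
    """Hypothetical software-in-the-loop harness with swappable modules."""

    sensor: Callable[[int], Frame]       # line index -> simulated frame
    algorithm: Callable[[Frame], float]  # frame -> per-line result

    def run(self, n_lines: int) -> List[float]:
        # Process each frame as soon as it is "captured".
        return [self.algorithm(self.sensor(i)) for i in range(n_lines)]


def small_sensor(i: int) -> Frame:
    """Assumed sensor model: 2 pixels x 2 bands."""
    return [[float(i), float(i + 1)] for _ in range(2)]


def wide_sensor(i: int) -> Frame:
    """Assumed alternative sensor model: 4 pixels x 3 bands."""
    return [[float(i)] * 3 for _ in range(4)]


def mean_intensity(frame: Frame) -> float:
    values = [v for px in frame for v in px]
    return sum(values) / len(values)


def max_intensity(frame: Frame) -> float:
    return max(v for px in frame for v in px)


baseline = SimulationRun(small_sensor, mean_intensity).run(3)
swapped_algorithm = SimulationRun(small_sensor, max_intensity).run(3)
swapped_sensor = SimulationRun(wide_sensor, mean_intensity).run(3)
```

Only the constructor arguments change between the three runs, which mirrors the claim that a different algorithm or a different set of sensor characteristics can be tried just by replacing the corresponding module.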

Conclusions

The validation and verification (V&V) process during the development cycle of any commercial product accounts for a large part of its success, as it determines the product's functionality and reliability. Autonomous systems require a tough battery of tests in different scenarios in order to keep the risk to humans and/or costly equipment low. In the particular case of UAVs, the weather (mainly wind and rain) is unpredictable and affects the performance of these flying platforms. A further level of difficulty is introduced depending on the target application. For example, using UAVs and spectral sensors in precision farming means dealing in some cases with temporary croplands, which in the worst case feature a one-year growing cycle. In order to deal with all of these variables, this work has presented a hyperspectral simulation environment for analyzing the virtual behaviour of a UAV equipped with a pushbroom hyperspectral camera used for harvesting applications. As a result, this tool eases the testing of the different phases involved in the automation of future farming, such as image generation, health status inspection and target detection.

For future research in this field, the researchers propose bringing perturbations from wind and other climate phenomena into the physical model of the AgSim environment. This would enable an even closer representation of real scenarios, with additional distortions, and allow the algorithms to be tested under tougher conditions.

Citation: A Simulation Environment for Validation and Verification of Real Time Hyperspectral Processing Algorithms on-Board a UAV, Pablo Horstrand, José Fco. López, Sebastián López, Tapio Leppälampi, Markku Pusenius and Martijn Rooker, Remote Sens. 2019, 11(16), 1852; https://doi.org/10.3390/rs11161852