Scene 3D Sketching for Drones

Unmanned Aerial Vehicles (UAVs) are gaining popularity in industry, especially now that autonomous and semi-autonomous drones have been developed and are being deployed in many applications, ranging from military and search and rescue operations to agriculture.

Computer vision is the field concerned with enabling computers to interpret, understand, and recreate the objects they capture as images. In UAVs, it is applied to 3D scene reconstruction, which builds 3D models of objects purely from multiple images taken from different angles.

New research, titled ‘Scene Wireframes Sketching for Drones’, was presented at the XoveTIC Congress, held in A Coruña, Spain, on the 27th and 28th of September, 2018. The authors of the paper are Roi Santos, Xose M. Pardo, and Xose R. Fdez-Vidal, who belong to CiTIUS at the University of Santiago de Compostela, Spain.

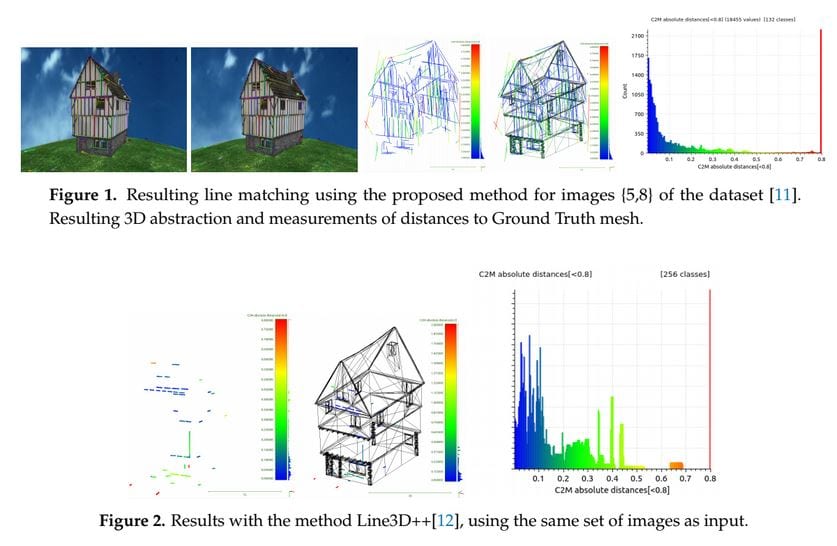

Their research sets out to obtain a real-time, three-dimensional representation of a scene from a limited number of matched line segments. The new approach is tested on images from the public Ground Truth dataset, and the results are compared with the output of the existing method Line3D++. The authors conclude that the proposed method recovers several structures of the house in the test scene from a small number of images while still achieving decent accuracy. Line3D++, on the other hand, returns sparse, short segments and fails to retrieve any long segment of the house in this test case.

With the increasing use of UAVs inside buildings and around man-made structures, there is a need for more accurate and comprehensive representations of their operating environments. Drones are therefore increasingly required to reconstruct their surroundings in 3D from images captured at multiple angles. Several methods exist to accomplish this, but research is ongoing to improve them or to develop new, more accurate ones. Most 3D scene abstraction methods rely on point matching, but the point ‘clouds’ formed this way do not concisely represent the structure of the environment.

Likewise, ‘line’ clouds formed of short and redundant segments with inaccurate directions limit the understanding of scenes that have poor texture, or whose texture resembles a repetitive pattern. A more complete and accurate method is therefore required to increase the complexity and detail of the recreated 3D scenes, and this is what the paper sets out to provide.

The authors' approach uses multi-scale line detection and matching to make the triangulation of line endpoints across pairs of line-matched frames more accurate. It also goes a step further in the least-squares adjustment of cameras and lines by exploiting the geometric relationships between coplanar lines. Once the spatial lines have been classified by coplanarity, the intersections of the lines are fed into a second run of the process.
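To make the idea of triangulating line endpoints and grouping lines by coplanarity more concrete, here is a minimal Python sketch. It is not the authors' pipeline: it uses generic two-view linear (DLT) triangulation and a simple plane-fit residual, and it assumes that the endpoints of a matched segment correspond exactly between views, which real detections rarely guarantee. All function names, the intrinsic matrix `K`, and the camera poses are hypothetical values chosen for illustration.

```python
import numpy as np

def projection_matrix(K, R, t):
    """Build a 3x4 projection matrix P = K [R | t] (hypothetical helper)."""
    return K @ np.hstack([R, t.reshape(3, 1)])

def project(P, X):
    """Project a 3D point X to pixel coordinates with projection matrix P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate_point(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point observed at x1 and x2."""
    A = np.array([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The homogeneous 3D point is the right singular vector belonging
    # to the smallest singular value of A.
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]

def triangulate_segment(P1, P2, seg1, seg2):
    """Triangulate both endpoints of a segment matched across two views."""
    return np.array([triangulate_point(P1, P2, a, b)
                     for a, b in zip(seg1, seg2)])

def coplanarity_residual(points):
    """Smallest singular value of the centred points; near zero if coplanar."""
    centred = points - points.mean(axis=0)
    return np.linalg.svd(centred, compute_uv=False)[-1]

# Assumed intrinsics and two camera poses (illustrative values only).
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
P1 = projection_matrix(K, np.eye(3), np.zeros(3))
P2 = projection_matrix(K, np.eye(3), np.array([-1.0, 0.0, 0.0]))

# A synthetic 3D segment, projected into both views and then recovered.
segment_3d = np.array([[0.0, 0.0, 5.0], [1.0, 0.0, 5.0]])
seg_view1 = [project(P1, X) for X in segment_3d]
seg_view2 = [project(P2, X) for X in segment_3d]
recovered = triangulate_segment(P1, P2, seg_view1, seg_view2)

# Two segments lying in the plane z = 5, and a variant with one endpoint off it.
coplanar = np.vstack([recovered, [[0.0, 1.0, 5.0], [1.0, 1.0, 5.0]]])
non_coplanar = np.vstack([recovered, [[0.0, 1.0, 5.0], [1.0, 1.0, 6.0]]])
flat_residual = coplanarity_residual(coplanar)
bent_residual = coplanarity_residual(non_coplanar)
```

With noise-free synthetic data, `recovered` matches `segment_3d` and `flat_residual` is close to zero, while `bent_residual` is clearly larger. In practice a threshold on such a residual would have to be tuned to the noise level, and a least-squares refinement would follow the linear estimate.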

This work presents a novel integration of a set of algorithms to create a line-based spatial sketch showing the main structures of the man-made environment lying in front of a camera. It takes as input the camera's intrinsic parameters and at least three pictures. The set of methods includes novel observation relations for groups of straight segments captured from different poses. Quantitative results have been obtained and compared with another state-of-the-art line-based structure-from-motion (SfM) method. Future work might include exploiting weak epipolar constraints during the line matching process.

Citation: Santos R, Pardo XM, Fdez-Vidal XR. Scene Wireframes Sketching for Drones. Proceedings. 2018; 2(18):1193. | http://www.mdpi.com/2504-3900/2/18/1193