AI

Autonomous Drones Can Access Areas Inaccessible or Too Dangerous for Humans

Researchers have been developing autonomous technology for first responders that aims to save victims’ lives as early as possible while minimizing harm to human rescuers.

The main challenge, however, is getting a machine equipped with artificial intelligence (AI), such as a drone, to identify and report back the correct status and number of victims. According to a World Economic Forum blog post, this is the problem that Vijayan Asari, Professor of Electrical and Computer Engineering at the University of Dayton, is trying to solve.

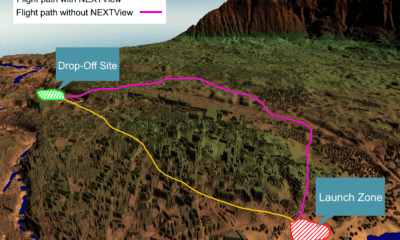

Imagine drones as first responders at the scene of a natural disaster, such as a flood or earthquake, or a human-caused one, such as a mass shooting or bombing. During the recovery after the deadly Alabama tornadoes, for instance, the drones required individual pilots to control the unmanned aircraft remotely. This can delay the review and, in turn, the delivery of aid.

At the University of Dayton Vision Lab, researchers led by Prof. Vijayan Asari are developing systems that can help spot people or animals, especially ones who might be trapped by fallen debris. Their technology mimics the behavior of a human rescuer, looking briefly at wide areas and quickly choosing specific regions to examine more closely.

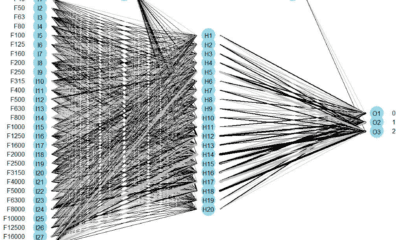

Disaster-struck areas are often cluttered with downed trees, collapsed buildings and torn-up roads, which makes spotting victims more difficult. To address this, Asari’s research team developed an artificial neural network system that can run on a computer onboard a drone. The system emulates, to an extent, how human vision works: it analyzes drone-captured images and communicates notable findings to human supervisors.

First, the system processes the images to improve their clarity. Much as humans squint to adjust their focus, the technology makes detailed estimates of the darker regions in a scene and computes lightened images. If the images are too hazy or foggy, the system reduces the whiteness of the image so that the actual scene can be viewed more clearly.
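The haze step can be illustrated with a very simple stand-in. The sketch below is not the team's method, which the article does not describe in detail. It uses dark-object subtraction, a basic technique that assumes the darkest pixel in the scene should really be black and treats its brightness as a uniform white veil to remove. The function name `remove_haze` and the choice of grayscale values in the range 0.0 to 1.0 are assumptions made for illustration.

```python
def remove_haze(image):
    """Remove a uniform white veil from a grayscale image.

    `image` is a list of rows; each pixel is a float in [0.0, 1.0].
    Assumption: the darkest pixel would be black without haze, so its
    value estimates the veil added to every pixel.
    """
    veil = min(min(row) for row in image)
    if veil >= 1.0:
        # Completely white image: nothing can be recovered, return a copy.
        return [list(row) for row in image]
    scale = 1.0 - veil
    # Subtract the veil, then stretch so pure white stays pure white.
    return [[(pixel - veil) / scale for pixel in row] for row in image]


hazy = [[0.4, 0.7],
        [1.0, 0.55]]
clear = remove_haze(hazy)
for row in clear:
    print([round(pixel, 2) for pixel in row])
```

A real scene rarely has uniform haze, so a practical system would estimate the veil region by region rather than once for the whole image.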

In rain, for example, the human brain focuses on the parts of a scene that don’t change and compares them with the ones that do as raindrops continue to fall, so people can see reasonably well despite the rain. The technology uses the same technique, continuously examining the contents of each location across a sequence of images to get clear information about the objects in that location.

The technology can also make images from a drone-borne camera larger, brighter and clearer. Expanding the size of the image allows both algorithms and people to see key features clearly.
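One common way to separate what stays constant from what changes across frames is a per-pixel temporal median. The sketch below uses that technique as an illustration of the idea. The article does not say which method the researchers use, and the function name `temporal_median` and the tiny example frames are hypothetical.

```python
from statistics import median


def temporal_median(frames):
    """Return the per-pixel median over a sequence of grayscale frames.

    `frames` is a list of images with identical dimensions; each image
    is a list of rows of brightness values. A raindrop brightens a pixel
    in only a few frames, so the median keeps the stable background.
    """
    height = len(frames[0])
    width = len(frames[0][0])
    return [
        [median(frame[y][x] for frame in frames) for x in range(width)]
        for y in range(height)
    ]


# Three frames of the same 1x3 scene; 255 marks a passing raindrop.
frames = [
    [[10, 20, 30]],
    [[10, 255, 30]],
    [[255, 20, 30]],
]
print(temporal_median(frames))  # [[10, 20, 30]]
```

The median works here because each raindrop covers a given pixel in fewer than half of the frames. It also assumes the camera is steady, so footage from a moving drone would need to be aligned first.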

In this nonlinear image enhancement process, the researchers simultaneously brighten dark, shadowy regions of the scene and compress (dim) over-exposed regions. The images below show the results of this process. Note the improved visibility in the shadowy region of the parking lot.

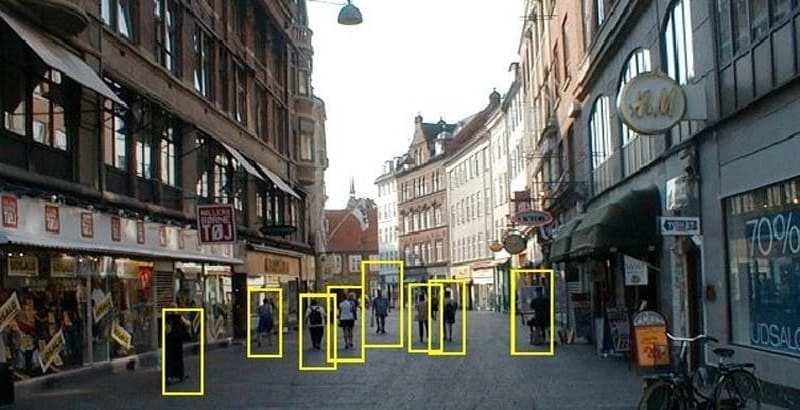
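To make the idea of a nonlinear enhancement concrete, here is a minimal sketch of a tone curve that lifts shadows and dims highlights in a single pass. It is an illustrative curve chosen for simplicity, not the researchers' algorithm. The function name `compress_toward_midtones` and the `strength` parameter are assumptions.

```python
import math


def compress_toward_midtones(value, strength=2.0):
    """Map a brightness in [0.0, 1.0] through a nonlinear tone curve.

    The distance from mid-gray (0.5) is rescaled to [-1, 1] and its
    magnitude is raised to `strength`. With strength > 1 the magnitude
    shrinks, so dark pixels get brighter and bright pixels get dimmer,
    while pure black, mid-gray and pure white stay where they are.
    """
    distance = 2.0 * value - 1.0
    shaped = abs(distance) ** strength
    return 0.5 + 0.5 * math.copysign(shaped, distance)


for brightness in (0.0, 0.1, 0.5, 0.9, 1.0):
    print(brightness, "->", round(compress_toward_midtones(brightness), 2))
```

Under this curve a shadow pixel at 0.1 moves up to about 0.18 and an over-exposed pixel at 0.9 moves down to about 0.82. A larger `strength` pulls values toward the midtones more aggressively.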

The system can identify people in various positions, such as lying prone or curled in the fetal position, even from different viewing angles and in varying lighting conditions.

The human brain can look at one view of an object and envision how it would look from other angles. The technology likewise computes three-dimensional models of people, either general human shapes or more detailed projections of specific people. Those models are used to find matches when a person appears in a scene.

The system can also be trained to detect and locate a leg sticking out from under rubble, a hand waving at a distance, or a head popping up above a pile of wooden blocks. It can differentiate a person or animal from a tree, bush or vehicle.

This autonomous technology searches the area to identify the most significant regions in the scene. It then investigates each of the selected regions to obtain information about the shape, structure and texture of the objects there. When it detects a set of features that matches a human being or part of a human, it flags that spot as the location of a victim. The drone also collects GPS data about the object’s location and senses how far it is from other objects being photographed. This enables the system to calculate the exact location of each person in need of assistance and alert rescuers accordingly.

The entire process of capturing an image, processing it for maximum visibility and analyzing it to identify trapped or concealed people takes about one-fifth of a second on the ordinary laptop computer that the drone carries along with its high-resolution camera.

The U.S. military has shown interest in this technology. The team has worked with the U.S. Army Medical Research and Materiel Command to find wounded individuals on a battlefield. The work has also been adapted to serve utility companies looking for intrusions on pipeline paths by construction equipment or vehicles that may damage the pipelines. With applications like these, this drone technology that simulates human vision is a boon for utility companies that manage pipeline pathways and for similar industries that require constant monitoring and detection.
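The article does not explain how the drone turns its own GPS fix into a victim's coordinates. One plausible ingredient is the standard great-circle "destination point" formula, which gives the latitude and longitude reached by travelling a known distance along a known compass bearing. The sketch below assumes the system can estimate that bearing and ground distance from the drone to the detected person. The function name `offset_position` and its inputs are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def offset_position(lat_deg, lon_deg, bearing_deg, distance_m):
    """Return (lat, lon) in degrees reached from a starting point.

    bearing_deg is measured clockwise from true north; distance_m is
    the ground distance. Uses a spherical Earth model, which is more
    than accurate enough over the short ranges a drone camera covers.
    """
    lat1 = math.radians(lat_deg)
    lon1 = math.radians(lon_deg)
    bearing = math.radians(bearing_deg)
    angular = distance_m / EARTH_RADIUS_M  # distance as an angle

    lat2 = math.asin(
        math.sin(lat1) * math.cos(angular)
        + math.cos(lat1) * math.sin(angular) * math.cos(bearing)
    )
    lon2 = lon1 + math.atan2(
        math.sin(bearing) * math.sin(angular) * math.cos(lat1),
        math.cos(angular) - math.sin(lat1) * math.sin(lat2),
    )
    return math.degrees(lat2), math.degrees(lon2)


# Hypothetical example: a person detected 40 m north-east of the drone.
drone_lat, drone_lon = 39.74, -84.18
print(offset_position(drone_lat, drone_lon, 45.0, 40.0))
```

In practice the accuracy of the reported location would depend far more on the quality of the GPS fix and the distance estimate than on the choice of Earth model.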