Deep Learning-Based Real-Time Multiple-Object Detection and Tracking via Drone

Target tracking is one of the most popular applications of unmanned aerial vehicles (UAVs), used in missions ranging from intelligence gathering and surveillance to reconnaissance. Target tracking by autonomous vehicles could prove a valuable tool for developing guidance systems: pedestrian detection, dynamic vehicle detection, and obstacle detection can all improve the features of a guidance assistance system. An aerial vehicle equipped with object recognition and tracking could play a vital role in drone navigation and obstacle avoidance, video surveillance, aerial views for traffic management, self-driving systems, road-condition monitoring, and emergency response.

Target detection capability in drones has made tremendous progress of late. Earlier drone systems mostly relied on conventional vision-based target-finding algorithms; finding a target, for instance, was done with a Raspberry Pi and OpenCV.

Now, researchers have proposed an effective method for detecting and tracking targets in aerial imagery captured by drones, using onboard sensors and computing devices running a deep learning framework.

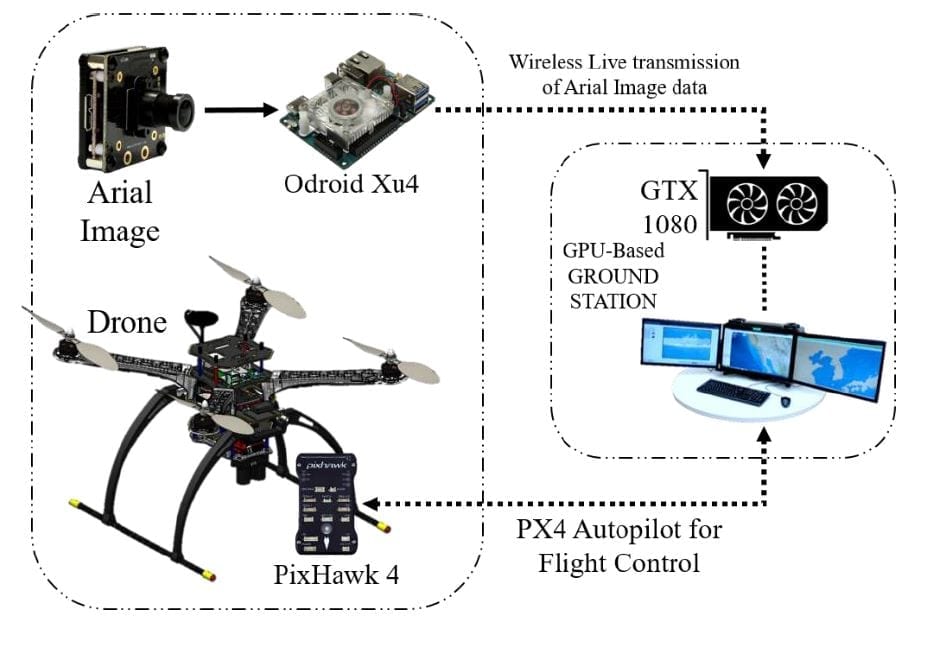

They developed two types of embedded modules: one built around an NVIDIA Jetson TX-series board or an AGX Xavier, and the other based on an Intel Neural Compute Stick. Using these embedded computing modules, they carried out a comparative analysis of current state-of-the-art deep learning-based multi-object detection algorithms, collecting detailed metrics on frame rates and computational power.
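The frame-rate comparison described above comes down to timing repeated inferences on each board. The following is a minimal sketch of such a measurement, assuming the detector can be wrapped in a Python callable; `measure_fps` and its `warmup` parameter are hypothetical names, not the authors' benchmarking code.

```python
import time
from typing import Callable, Sequence


def measure_fps(detect: Callable[[object], object],
                frames: Sequence[object],
                warmup: int = 5) -> float:
    """Average frames per second of `detect` over `frames`.

    `detect` stands in for any detector wrapped as a function (for example a
    YOLO or SSD model); it is called once per frame. The first `warmup` frames
    are processed but not timed, since early inferences on an embedded GPU
    often include one-off setup costs.
    """
    if len(frames) <= warmup:
        raise ValueError("need more frames than warm-up iterations")
    for frame in frames[:warmup]:
        detect(frame)
    timed = frames[warmup:]
    start = time.perf_counter()
    for frame in timed:
        detect(frame)
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero interval
    return len(timed) / elapsed


if __name__ == "__main__":
    # Dummy "detector" that takes about 10 ms per frame, so roughly 100 FPS or less.
    print(f"{measure_fps(lambda frame: time.sleep(0.01), list(range(25))):.1f} FPS")
```

Running the same harness with the same frames on each module is what makes the numbers comparable across boards.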

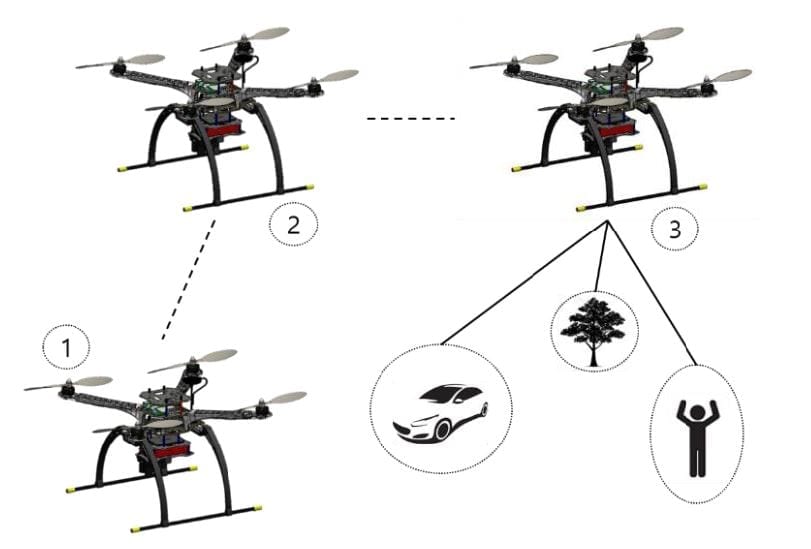

3D CAD design of the external structure of an aerial vehicle with a camera mounted on it.

In this study, the researchers used various algorithms and different embedded systems to execute them; the deep learning drone project used a Jetson GPU and Faster R-CNN to detect and track objects. In their paper, the researchers describe an on-board and an off-board system developed for an aerial vehicle using well-known object detection algorithms. The paper also presents a 3D CAD design of the aerial vehicle, and the overall setup comprised:

- On-board embedded GPU system

- Off-board GPU-based ground station

- On-board GPU-constrained system

The project aimed to add object tracking to YOLOv3 (You Only Look Once, version 3), a fast object detection algorithm, and to achieve real-time tracking using the Simple Online and Realtime Tracking (SORT) algorithm with a deep association metric (Deep SORT). YOLO is an apt choice when real-time detection is needed without sacrificing too much accuracy: it uses a single convolutional neural network (CNN) both to classify objects and to localize them with bounding boxes.
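To make the single-network idea concrete: for each grid cell and anchor box, YOLOv3 predicts raw offsets (tx, ty, tw, th). The box center is sigmoid(tx) + cx, sigmoid(ty) + cy and its size is pw * exp(tw), ph * exp(th), where (cx, cy) is the cell's top-left corner and (pw, ph) the anchor size, all in grid units. Overlapping detections of the same object are then pruned with non-maximum suppression (NMS). The sketch below is a pure-Python illustration of those two steps, not Darknet's implementation; the function names and the 0.45 IoU threshold are illustrative assumptions.

```python
import math
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # corner format: (x1, y1, x2, y2)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def decode_yolov3(tx: float, ty: float, tw: float, th: float,
                  cx: float, cy: float, pw: float, ph: float) -> Box:
    """Turn raw YOLOv3 offsets for one anchor into a corner-format box (grid units)."""
    bx = sigmoid(tx) + cx      # center x stays inside its grid cell
    by = sigmoid(ty) + cy      # center y stays inside its grid cell
    bw = pw * math.exp(tw)     # width rescales the anchor prior
    bh = ph * math.exp(th)     # height rescales the anchor prior
    return (bx - bw / 2, by - bh / 2, bx + bw / 2, by + bh / 2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], thresh: float = 0.45) -> List[int]:
    """Greedy non-maximum suppression; returns indices of the boxes to keep."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any higher-scoring kept box.
        if all(iou(boxes[i], boxes[k]) <= thresh for k in keep):
            keep.append(i)
    return keep
```

In a full detector these boxes would be scaled from grid units to pixels and NMS applied per class.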

Illustration of the aerial vehicle searching for a target: the drone takes off from the ground, follows designated waypoints, and looks for objects.

The study also introduced an effective approach for tracking moving objects, based on an extension of SORT. It was developed by integrating a deep learning-based association metric into SORT; the resulting Deep SORT tracker combines a single-hypothesis tracking methodology, recursive Kalman filtering, and frame-by-frame data association driven by that metric. The team also introduced a guidance system that tracks the target position using a GPU-based algorithm. Last but not least, they demonstrated the effectiveness of the proposed algorithms through real-time experiments with a small multi-rotor drone.
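SORT and Deep SORT predict each track's next position with a constant-velocity Kalman filter before associating it with new detections. The sketch below shows the predict/update cycle for a single coordinate (for example the box center's x), which keeps the matrices 2x2; the real trackers filter several box parameters jointly. The class name and the noise values `q` and `r` are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ConstantVelocityKF:
    """1-D constant-velocity Kalman filter over the state [position, velocity]."""
    pos: float
    vel: float = 0.0
    P: List[List[float]] = field(default_factory=lambda: [[10.0, 0.0], [0.0, 10.0]])
    q: float = 0.01  # process noise added to both state variables (assumed value)
    r: float = 1.0   # measurement noise variance (assumed value)

    def predict(self, dt: float = 1.0) -> float:
        # State transition x <- F x with F = [[1, dt], [0, 1]].
        self.pos += dt * self.vel
        # Covariance P <- F P F^T + Q, written out for the 2x2 case.
        p00, p01, p10, p11 = self.P[0][0], self.P[0][1], self.P[1][0], self.P[1][1]
        self.P = [
            [p00 + dt * (p01 + p10) + dt * dt * p11 + self.q, p01 + dt * p11],
            [p10 + dt * p11, p11 + self.q],
        ]
        return self.pos

    def update(self, z: float) -> float:
        # Measurement model H = [1, 0]: only the position is observed.
        p00, p01, p10, p11 = self.P[0][0], self.P[0][1], self.P[1][0], self.P[1][1]
        s = p00 + self.r               # innovation covariance
        k0, k1 = p00 / s, p10 / s      # Kalman gain K = P H^T / s
        y = z - self.pos               # innovation
        self.pos += k0 * y
        self.vel += k1 * y
        # Covariance P <- (I - K H) P.
        self.P = [
            [(1 - k0) * p00, (1 - k0) * p01],
            [p10 - k1 * p00, p11 - k1 * p01],
        ]
        return self.pos
```

A tracker calls `predict` every frame and `update` only when a detection is matched to the track, so a briefly occluded object keeps moving along its estimated velocity.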

Target detection from an aerial vehicle using the GPU-based ground station.

By integrating appearance information, the algorithm improves on SORT, allowing objects to be tracked through longer periods of occlusion. It also reduces the number of identity switches by 45%. According to the research paper, experiments also showed competitive overall performance at high frame rates.
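In Deep SORT, appearance information takes the form of an embedding vector per detection, and each track keeps a gallery of recent embeddings; a detection's appearance cost for a track is its smallest cosine distance to that gallery. The sketch below computes that cost and then matches tracks to detections greedily. This is a simplification: Deep SORT solves the assignment with the Hungarian algorithm inside a matching cascade and also gates candidates by Mahalanobis distance from the Kalman prediction. The function names and the `max_dist` threshold are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def normalize(v: Vector) -> List[float]:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > 0 else list(v)


def appearance_distance(gallery: List[Vector], det: Vector) -> float:
    """Smallest cosine distance between a detection embedding and a track's gallery."""
    if not gallery:
        raise ValueError("track gallery is empty")
    d = normalize(det)
    return min(1.0 - sum(a * b for a, b in zip(normalize(g), d)) for g in gallery)


def greedy_associate(galleries: Dict[int, List[Vector]],
                     detections: List[Vector],
                     max_dist: float = 0.2) -> List[Tuple[int, int]]:
    """Return (track_id, detection_index) pairs, cheapest appearance cost first."""
    costs = sorted(
        (appearance_distance(g, det), tid, j)
        for tid, g in galleries.items()
        for j, det in enumerate(detections)
    )
    used_tracks, used_dets, matches = set(), set(), []
    for cost, tid, j in costs:
        if cost > max_dist:
            break  # all remaining pairs are too dissimilar to match
        if tid not in used_tracks and j not in used_dets:
            matches.append((tid, j))
            used_tracks.add(tid)
            used_dets.add(j)
    return sorted(matches)
```

Unmatched detections would start new tentative tracks, which is where the reduction in identity switches comes from: a reappearing object is matched back to its old track by appearance rather than given a new ID.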

Conclusion: According to the research team, the experiments on different GPU systems showed that several types of contemporary target detection algorithms performed very well on the Jetson Xavier. The Jetson TX1 is ideal for lightweight models such as YOLOv2-tiny. The Jetson TX2, a moderately powerful GPU system, showed outstanding results with YOLOv2 and SSD-Caffe. Among the three on-board GPU-constrained systems, the Odroid XU4 with the NCS performed best. The study also presented the tracking procedure for each embedded system, along with the runtime, GPU usage, and size of the platforms used in the experiments.

Target detection results of the GPU-based ground system.

Notably, the guiding algorithm for following a person worked only within about 20 m of the target, because person detection with YOLOv2 failed beyond that distance. The guiding algorithm was based purely on the detection results and the bounding-box coordinates. It had one limitation: when it faced multiple targets of the same class, it randomly chose one of them to track. According to the researchers, further research is needed to make the algorithm more robust in such scenarios.
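Because the guiding algorithm relied only on bounding-box coordinates, its core can be pictured as a simple proportional controller. The following is a hypothetical sketch, not the authors' controller: the gains, the desired box-height fraction, and the sign convention (a positive yaw rate turns toward the right side of the image) are all assumptions. It takes a single box, so choosing among several same-class detections is left to the caller.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def follow_command(box: Box, img_w: int, img_h: int,
                   k_yaw: float = 1.0, k_fwd: float = 2.0,
                   target_height_frac: float = 0.4,
                   max_cmd: float = 1.0) -> Tuple[float, float]:
    """Map one bounding box to a (yaw_rate, forward_speed) command.

    Hypothetical proportional law: yaw toward the box center, move forward
    while the box looks smaller than the desired size (target far away),
    and back off when it looks larger (target too close).
    """
    x1, y1, x2, y2 = box
    # Horizontal offset of the box center from the image center, in [-1, 1].
    err_x = ((x1 + x2) / 2 - img_w / 2) / (img_w / 2)
    # Positive when the target appears smaller than desired.
    err_size = target_height_frac - (y2 - y1) / img_h

    def clip(v: float) -> float:
        # Saturate commands to a symmetric limit.
        return max(-max_cmd, min(max_cmd, v))

    return clip(k_yaw * err_x), clip(k_fwd * err_size)
```

A centered box of the desired height yields a zero command; a small box near the right edge yields a right turn and forward motion.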

The research team comprised Sabir Hossain and Deok-jin Lee, with support from the School of Mechanical & Convergence System Engineering at Kunsan National University, Korea.

Citation: Deep Learning-Based Real-Time Multiple-Object Detection and Tracking from Aerial Imagery via a Flying Robot with GPU-Based Embedded Devices, Sabir Hossain and Deok-jin Lee, School of Mechanical & Convergence System Engineering, Kunsan National University, 558 Daehak-ro, Gunsan 54150, Korea, Sensors 2019, 19(15), 3371; https://doi.org/10.3390/s19153371, https://www.mdpi.com/1424-8220/19/15/3371
