Method to Estimate Human Poses in Real-Time via Drones

Traditional multi-view techniques

Video game models, sci-fi characters in movies and humanoid models in sports analysis are typically built with photogrammetric or scanning techniques: cameras and image processing, carefully programmed to capture the dimensions and profiles of the bodies being scanned and modeled. These systems generally rely on body-worn markers, although some recent systems work without a special marker suit. Multi-view approaches can be highly accurate and sometimes provide dense surface reconstructions.

The problem with traditional techniques

Traditional methods can be very accurate, but most of them require a set of environment-mounted cameras that are carefully calibrated to record one particular location. These techniques are therefore tied to the environment in which they are installed, which makes them costly for developers and producers and infeasible for some projects.

Environment-independent approach

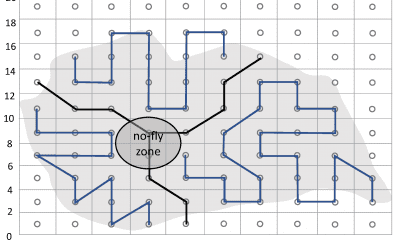

Removing the constraints that environment and architecture impose on multi-view techniques is a major challenge for developers. The publication ‘Flycon: Real-time Environment-independent Multi-view Human Pose Estimation with Aerial Vehicles’, by researchers at ETH Zurich and Delft University of Technology, addresses this problem with an environment-independent approach to multi-view human motion capture that uses an autonomous swarm of camera-equipped micro aerial vehicles (MAVs), or drones. The approach jointly optimizes the swarm and skeletal states, which include the 3D joint positions and a set of bones.

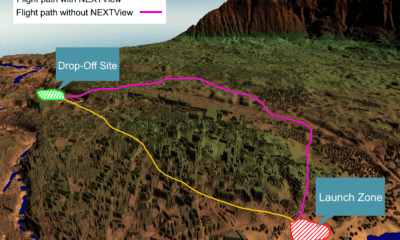

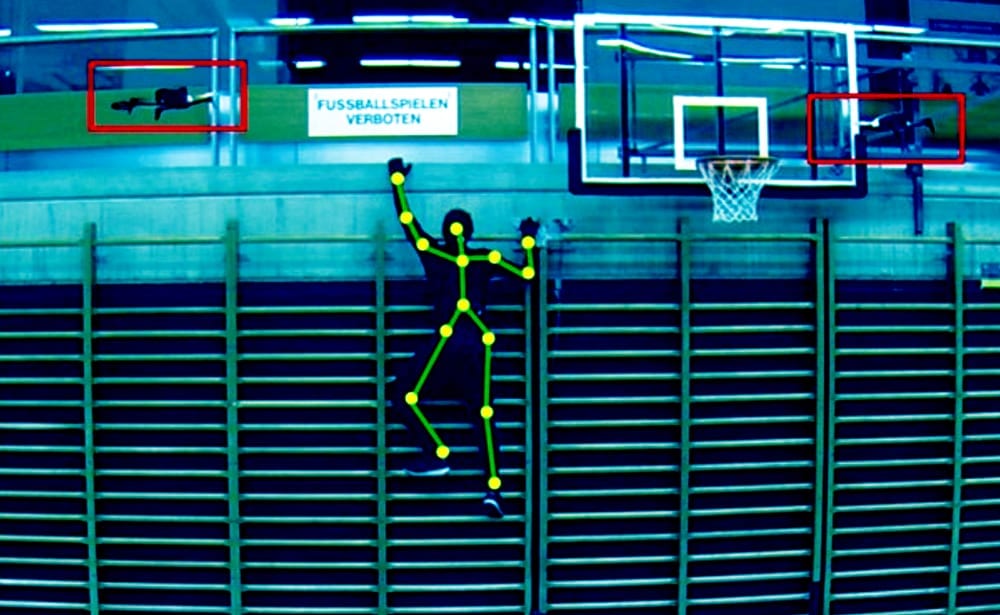

Novel method for environment-independent estimation of human poses in real-time. The researchers demonstrate the proposed system in a number of outdoor (left) and indoor (middle) experiments. They estimate the positions of a quadrotor swarm as well as the full human pose in real-time. A model predictive controller computes optimal quadrotor inputs to follow the human and always keep the markers visible (middle). The method can accurately estimate articulated motion over long time frames and distances. The right figure shows the accumulated joint positions, relative to the center of the person, over a 170 m long walking sequence.

The newly developed method allows real-time tracking of the motion of a human subject, for example an athlete, over long time horizons and long distances, in challenging settings and at large scale, where fixed-infrastructure approaches are not applicable.

The proposed algorithm uses active infra-red markers, runs in real-time and accurately estimates robot and human pose parameters online without the need for accurately calibrated or stationary mounted cameras.

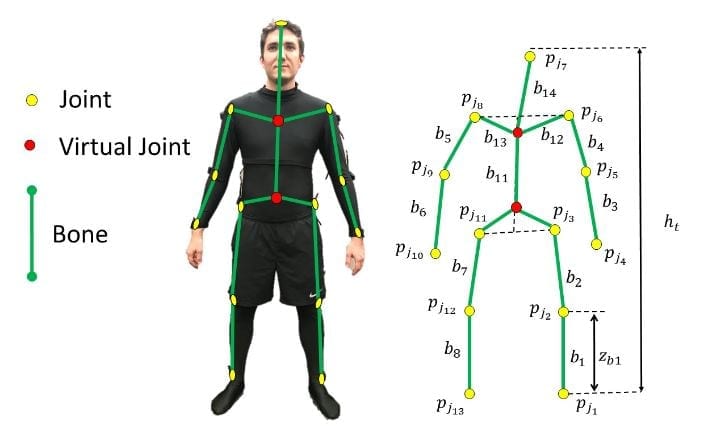

Schematic of the states used to model the human skeleton. The estimated skeleton consists of 13 real joint markers (yellow), two virtual markers (red) and 14 bones (green). The virtual markers are computed from the physical markers and are introduced for better bone length estimates.
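To make the skeleton state concrete, here is a minimal Python sketch. Only the counts (13 physical markers, two virtual markers, 14 bones) come from the article. The marker names, the bone topology, and the rule that each virtual marker is the midpoint of two physical markers are illustrative assumptions and may differ from the publication.

```python
import math

# Hypothetical marker names; the article only states that there are 13.
PHYSICAL = [
    "head", "l_shoulder", "r_shoulder", "l_elbow", "r_elbow",
    "l_wrist", "r_wrist", "l_hip", "r_hip", "l_knee", "r_knee",
    "l_ankle", "r_ankle",
]

# Assumption: each virtual marker is the midpoint of two physical markers.
VIRTUAL = {
    "neck": ("l_shoulder", "r_shoulder"),
    "pelvis": ("l_hip", "r_hip"),
}

# Assumed tree of 14 bones connecting the 15 markers.
BONES = [
    ("head", "neck"), ("neck", "pelvis"),
    ("neck", "l_shoulder"), ("l_shoulder", "l_elbow"), ("l_elbow", "l_wrist"),
    ("neck", "r_shoulder"), ("r_shoulder", "r_elbow"), ("r_elbow", "r_wrist"),
    ("pelvis", "l_hip"), ("l_hip", "l_knee"), ("l_knee", "l_ankle"),
    ("pelvis", "r_hip"), ("r_hip", "r_knee"), ("r_knee", "r_ankle"),
]


def midpoint(a, b):
    """Return the point halfway between two 3D points."""
    return tuple((x + y) / 2.0 for x, y in zip(a, b))


def add_virtual_markers(joints):
    """Return a copy of `joints` extended with the derived virtual markers."""
    full = dict(joints)
    for name, (a, b) in VIRTUAL.items():
        full[name] = midpoint(joints[a], joints[b])
    return full


def bone_lengths(joints):
    """Compute the Euclidean length of every bone from 3D marker positions."""
    full = add_virtual_markers(joints)
    return {(a, b): math.dist(full[a], full[b]) for a, b in BONES}
```

Under these assumptions, a virtual marker gives the limbs a common anchor point, so each bone is defined between exactly two markers and its length can be measured in every frame.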

What it does

According to the publication, the algorithm performs the following tasks:

- Estimates a global coordinate frame for the MAV swarm.

- Jointly optimizes the human pose and relative camera positions.

- Estimates the lengths of the human bones.

The entire swarm is then controlled via a model predictive controller to maximize visibility of the subject from multiple viewpoints, even under fast motion such as jumping or jogging.
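The article does not give the estimation equations. As a much simpler stand-in for the multi-view part of the estimation tasks above, the sketch below triangulates a single marker from the viewing rays of several cameras by finding the point closest to all rays in the least-squares sense. It assumes the camera positions are already known, which the actual method does not; the function names are hypothetical.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with pivoting."""
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("rays are parallel; the point is not observable")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for k in range(col, 4):
                M[r][k] -= f * M[col][k]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        s = M[i][3] - sum(M[i][k] * x[k] for k in range(i + 1, 3))
        x[i] = s / M[i][i]
    return x


def triangulate(centers, directions):
    """Least-squares intersection of rays (camera center + viewing direction).

    Minimizes the summed squared distance from the point to every ray,
    which leads to the normal equations sum(P_i) x = sum(P_i c_i) with
    P_i = I - d_i d_i^T for unit direction d_i and camera center c_i.
    """
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for c, d in zip(centers, directions):
        d = normalize(d)
        for i in range(3):
            for j in range(3):
                p = (1.0 if i == j else 0.0) - d[i] * d[j]
                A[i][j] += p
                b[i] += p * c[j]
    return solve3(A, b)
```

At least two non-parallel rays are needed. This is one reason a multi-view system benefits from spreading its cameras around the subject.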

Challenges addressed by this new approach

The approach also addresses a number of challenges:

- The 3D joint locations move in an articulated, non-rigid fashion, whereas traditional localization approaches assume a rigid scene. Accounting for non-rigidity removes that assumption from the processing, which improves the system's accuracy.

- Instead of fixed cameras, the approach uses cameras mounted on drones. The camera configuration therefore adjusts dynamically to the position and state of the human being captured. This is preferable to stationary cameras, which are calibrated to record one particular location.

- The proposed approach does not depend on any external positioning signal such as GPS. This widens its field of application: it can, for example, be used in locations where GPS is not helpful, such as indoors.

- The method enables motion capture in previously difficult or entirely infeasible scenarios, such as continuously reconstructing the full-body pose of an athlete throughout an entire workout, or capturing actors in remote and difficult-to-reach locations, for example while climbing.

- The publication proposes a completely self-contained method for the joint estimation and control of the states of multiple MAVs and of the 3D human skeletal configuration. The proposed algorithm runs in real-time and accurately estimates the positions of the robot swarm and the human pose parameters. In addition, real-time computation of the drone trajectories keeps the cameras focused on the subject being captured.
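The goal of keeping the subject in view can be illustrated with a simple visibility test. The sketch below is not the model predictive controller from the publication. It assumes an idealized pinhole camera that only rotates about the vertical axis, and the field-of-view values and function names are hypothetical.

```python
import math


def yaw_towards(cam_pos, target):
    """Yaw angle (radians, about the z axis) that points the camera at target."""
    return math.atan2(target[1] - cam_pos[1], target[0] - cam_pos[0])


def is_visible(cam_pos, cam_yaw, point,
               h_fov=math.radians(60.0), v_fov=math.radians(45.0)):
    """Check whether a 3D point falls inside the camera's field of view.

    The camera frame has x pointing forward, y to the left and z up.
    A point is visible if it lies in front of the camera and its
    normalized image coordinates stay within the image borders.
    """
    dx, dy, dz = (p - c for p, c in zip(point, cam_pos))
    forward = math.cos(cam_yaw) * dx + math.sin(cam_yaw) * dy
    left = -math.sin(cam_yaw) * dx + math.cos(cam_yaw) * dy
    if forward <= 0.0:
        return False  # point is behind the camera
    return (abs(left / forward) <= math.tan(h_fov / 2.0)
            and abs(dz / forward) <= math.tan(v_fov / 2.0))


def cameras_seeing(cameras, marker):
    """Count how many (position, yaw) cameras currently see the marker."""
    return sum(is_visible(pos, yaw, marker) for pos, yaw in cameras)
```

A controller could use a count like `cameras_seeing` as one term of its objective, steering each drone so that every marker stays visible from several viewpoints while the person moves.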

Experiments and demonstrations

In one of the four experiments, a participant performing jumping jacks was tracked by drones controlled by the researchers' algorithm. The frame rate or shutter speed of the camera limited the limb velocity that could be observed.

However, the tracked positions are accurate, as is apparent from the 3D link model shown in the image.

Citation: Flycon: Real-time Environment-independent Multi-view Human Pose Estimation with Aerial Vehicles – https://ait.ethz.ch/projects/2018/flycon/downloads/flycon.pdf